1. Introduction

Welcome to the User Guide for WANdisco Fusion, version 2.14.

1.1. What is Fusion?

WANdisco Fusion is a software application that allows Hadoop deployments to replicate HDFS data between Hadoop clusters that are running different, even incompatible, versions of Hadoop. It is even possible to replicate between different vendor distributions and versions of Hadoop.

1.1.1. Benefits

-

Virtual File System for Hadoop, compatible with all Hadoop applications.

-

Single, virtual Namespace that integrates storage from different types of Hadoop, including CDH, HDP, EMC Isilon, Amazon S3/EMRFS and MapR.

-

Storage can be globally distributed.

-

WAN replication using the WANdisco Fusion LiveData platform, delivering single-copy consistent HDFS data, replicated between far-flung data centers.

1.2. Using this guide

This guide describes how to install and administer WANdisco Fusion as part of a multi-data-center Hadoop deployment, using either on-premises or cloud-based clusters. This guide contains the following:

- Welcome: This chapter introduces this user guide and explains how to use it.

- Release Notes: Details the latest software release, covering new features, fixes and known issues to be aware of.

- Concepts: Explains the core concepts of how WANdisco Fusion operates, and how it fits into a Big Data environment.

- Installation (On-premises): Covers the steps required to install and set up WANdisco Fusion in an on-premises environment.

- Installation (Cloud): Covers the steps required to install and set up WANdisco Fusion in a cloud-based environment.

- Operation: The steps required to run, reconfigure and troubleshoot WANdisco Fusion.

- Reference: Additional WANdisco Fusion documentation, including documentation for the available REST API.

1.3. Symbols in the documentation

In this guide we highlight types of information using the following callouts:

| The alert symbol highlights important information. |

| The STOP symbol cautions you against doing something. |

| Tips are principles or practices that you’ll benefit from knowing or using. |

| The KB symbol shows where you can find more information, such as in our online Knowledge base. |

1.4. Get support

See our online Knowledge base, which contains updates and more information.

If you need more help, raise a case on our support website.

We use terms that relate to the Hadoop ecosystem, WANdisco Fusion and WANdisco’s DConE replication technology. If you encounter any unfamiliar terms, check out the Glossary.

1.5. Local Language Support

WANdisco Fusion supports internationalization (i18n) and currently renders in the following languages:

| Language | Code |
| U.S. English | en-US |
| Simplified Chinese | zh-CN |

During the command-line installation phase, the display language is set by the system’s locale. In use, the display language is determined by the user’s browser settings. Where language support is not available for your locale, U.S. English is displayed.

To handle non-ASCII characters in file and folder names, the LC_ALL environment variable must be set to en_US.UTF-8.

This can be edited in /etc/wandisco/fusion/ui/main.conf.

You must make sure that the locale is correctly installed.

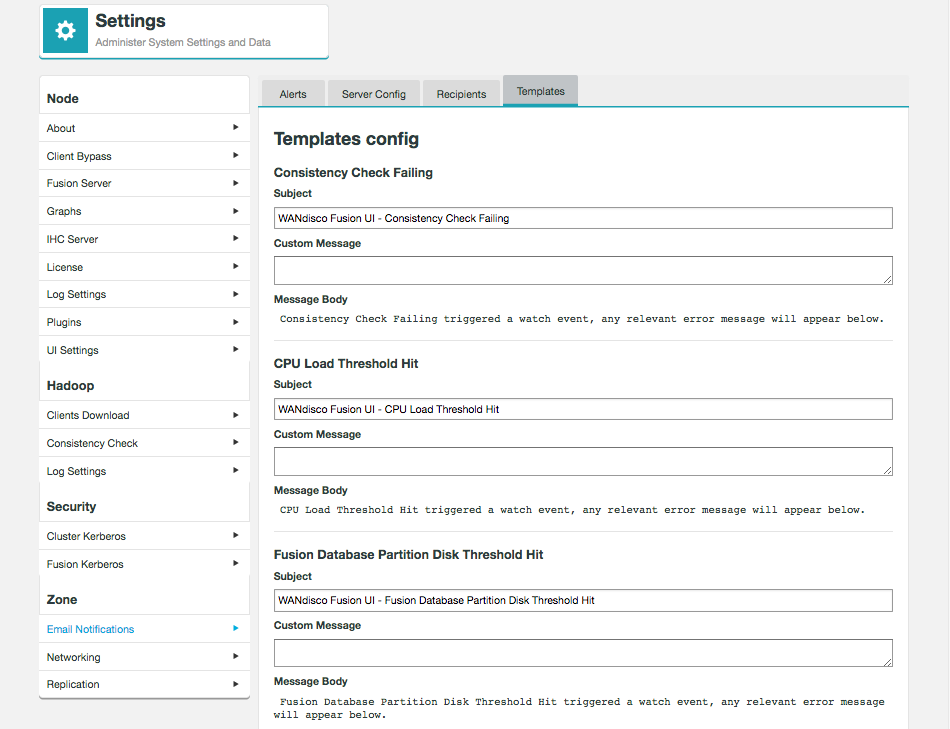

There are a few areas that are not automatically translated, for example email templates, but these can be easily modified as described in the relevant sections.

1.6. Give feedback

If you find an error or if you think some information needs improving, raise a case on our support website or email docs@wandisco.com.

2. Release Notes

2.1. Version 2.14.0 Build 2675

4 July 2019

For the release notes and information on Known issues, please visit the Knowledge base - WANdisco Fusion 2.14.0 Build 2675 Release Notes.

3. Concepts

3.1. Product concepts

3.1.1. What is WANdisco Fusion

WANdisco Fusion shares data between two or more clusters. Shared data is replicated between clusters using DConE, WANdisco’s proprietary coordination engine. This isn’t a spin on mirroring data: every cluster can write into the shared data directories, and the resulting changes are coordinated in real time between clusters.

100% Reliability

LiveData uses a set of Paxos-based algorithms to continue to replicate even after brief network outages; data changes automatically catch up once connectivity between clusters is restored.

Below the coordination stream, actual data transfer is done as an asynchronous background process and doesn’t consume MapReduce resources.

Replication where and when you need it

WANdisco Fusion supports selective replication, where you control which data is replicated to particular clusters, based on your security or data management policies. Data can be replicated globally, so that it is available to every cluster, or to just one cluster.

The Benefits of WANdisco Fusion

-

Ingest data to any cluster, sharing it quickly and reliably with other clusters. This removes fragile data transfer bottlenecks and lets you process data in multiple places, improving performance and getting more utilization from backup clusters.

-

Support a bimodal or multimodal architecture to enable innovation without jeopardizing SLAs. Perform different stages of the processing pipeline on the best cluster. Need a dedicated high-memory cluster for in-memory analytics? Or want to take advantage of an elastic scale-out on a cheaper cloud environment? Got a legacy application that’s locked to a specific version of Hadoop? WANdisco Fusion has the connections to make it happen. And unlike batch data transfer tools, WANdisco Fusion provides fully consistent data that can be read and written from any site.

-

Put away the emergency pager. If you lose data on one cluster, or even an entire cluster, WANdisco Fusion has made sure that you have consistent copies of the data at other locations.

-

Set up security tiers to isolate sensitive data on secure clusters, or keep data local to its country of origin.

-

Perform risk-free migrations. Stand up a new cluster and seamlessly share data using WANdisco Fusion. Then migrate applications and users at your leisure, and retire the old cluster whenever you’re ready.

3.2. WANdisco Fusion architecture

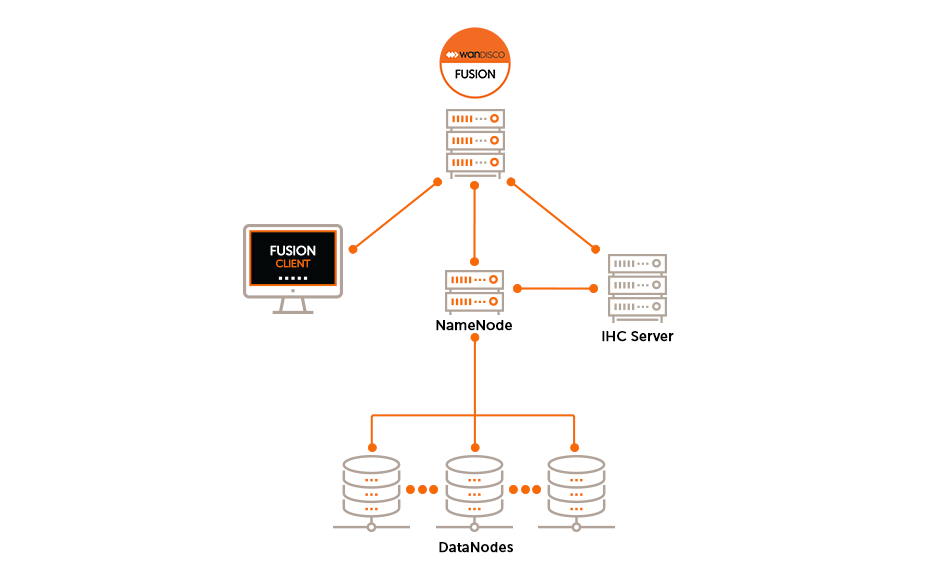

3.2.1. Example Workflow

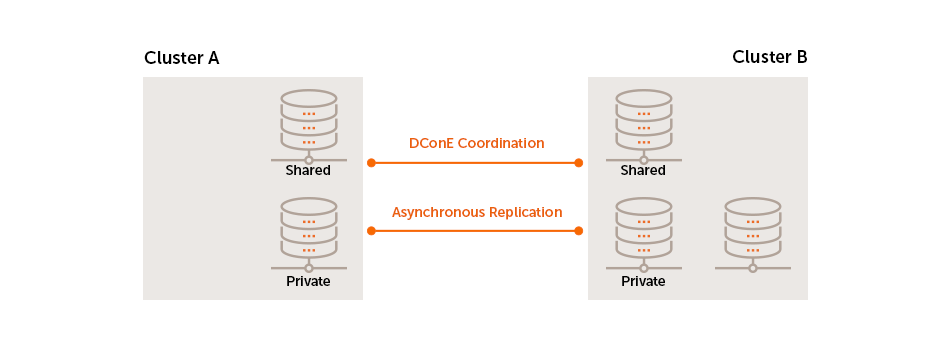

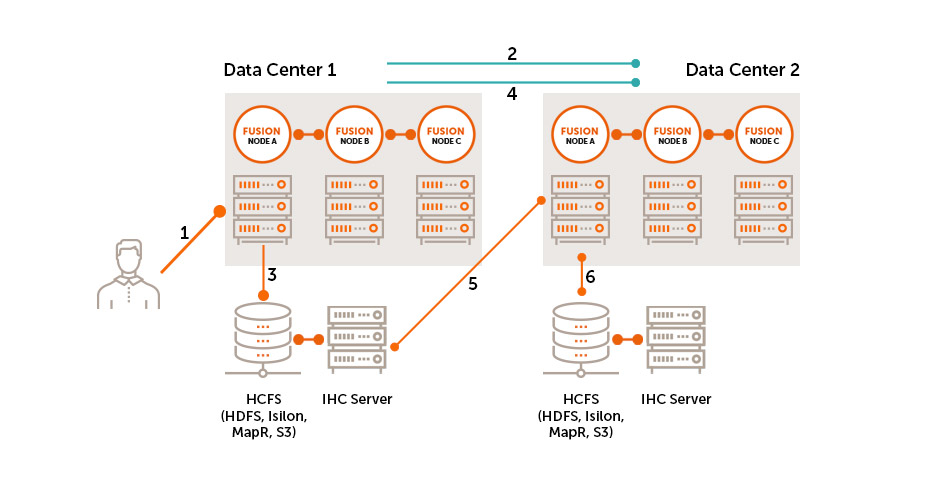

The following diagram presents a simplified workflow for WANdisco Fusion, which illustrates a basic use case and points to how WANdisco’s distributed coordination engine (DConE) is implemented to overcome the challenges of coordination.

-

User makes a request to create or change a file on the cluster.

-

WANdisco Fusion coordinates File Open to the external cluster.

-

File is added to underlying storage.

-

WANdisco Fusion coordinates writes, at configurable increments, and File Close with other clusters.

-

The WANdisco Fusion server at the remote cluster pulls data from the IHC server on the source cluster.

-

WANdisco Fusion server at remote site writes data to its local cluster.

3.2.2. Guide to node types

A Primer on Paxos

Replication networks are composed of a number of nodes, each node takes on one of a number of roles:

Acceptors (A)

The Acceptors act as the gatekeepers for state change and are collected into groups called Quorums. For any proposal to be accepted, it must be sent to a Quorum of Acceptors. Any proposal received from an Acceptor node will be ignored unless it is received from each Acceptor in the Quorum.

Proposers (P)

Proposer nodes are responsible for proposing changes, via client requests, and aim to receive agreement from a majority of Acceptors.

Learners (L)

Learners handle the actual work of replication. Once a client request has been agreed on by a Quorum, the Learner may take action, such as executing the request and sending a response to the client. Adding more Learner nodes improves availability for the processing.

Distinguished Node

It’s common for a Quorum to be a majority of participating Acceptors. However, if there’s an even number of nodes within a Quorum this introduces a problem: the possibility that a vote may tie. To handle this scenario a special type of Acceptor is available, called a Distinguished Node. This machine gets a slightly larger vote so that it can break 50/50 ties.
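The quorum and tie-breaking rules above can be illustrated with a small weighted-majority check. This is a minimal sketch, not DConE's implementation: the node names are hypothetical, and modelling the distinguished node's "slightly larger vote" as a bonus of 0.5 is an assumption chosen so that it can break a 50/50 tie without being able to outvote two ordinary Acceptors.

```python
# Minimal sketch of a weighted-majority quorum test (illustrative, not DConE code).
# Assumption: the distinguished node's "slightly larger vote" is modelled as 1 + 0.5.
DISTINGUISHED_BONUS = 0.5  # any value in (0, 1) breaks ties without outweighing two Acceptors


def vote_weight(node, distinguished):
    """Weight of one Acceptor's vote."""
    return 1 + (DISTINGUISHED_BONUS if node == distinguished else 0)


def has_quorum(acceptors, votes_for, distinguished=None):
    """True if the Acceptors in `votes_for` hold more than half of the total voting weight."""
    total = sum(vote_weight(a, distinguished) for a in acceptors)
    in_favour = sum(vote_weight(a, distinguished) for a in votes_for if a in acceptors)
    return in_favour > total / 2


acceptors = {"A", "B", "C", "D"}  # hypothetical four-Acceptor membership
tied_without_tiebreak = has_quorum(acceptors, {"A", "B"})             # 2 of 4: a tie, so False
with_tiebreak = has_quorum(acceptors, {"A", "B"}, distinguished="A")  # 2.5 of 4.5: True
other_half = has_quorum(acceptors, {"C", "D"}, distinguished="A")     # 2 of 4.5: False
```

With an odd number of Acceptors, such as three, a plain majority is always decisive and no tie-breaker is needed.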

Nodes in Fusion

- APL

Acceptor - the node will vote on the order in which replicated changes will play out.

Proposer - the node will create proposals for changes that can be applied to the other nodes.

Learner - the node will receive replication traffic that will synchronize its data with other nodes.

- PL

Proposer - the node will create proposals for changes that can be applied to the other nodes.

Learner - the node will receive replication traffic that will synchronize its data with other nodes.

- Distinguished Node

Acceptor - the distinguished node is used in situations where there is an even number of nodes, a configuration that introduces the risk of a tied vote. The Distinguished Node’s bigger vote ensures that it is not possible for a vote to become tied.

3.2.3. Zones

A Zone represents the file system used in a standalone Hadoop cluster. Multiple Zones could be from separate clusters in the same data center, or could be from distinct clusters operating in geographically-separate data centers that span the globe. WANdisco Fusion operates as a distributed collection of servers. While each WANdisco Fusion server always belongs to only one Zone, a Zone can have multiple WANdisco Fusion servers (for load balancing and high availability). When you install WANdisco Fusion, you should create a Zone for each cluster’s file system.

3.2.4. Memberships

WANdisco Fusion is built on WANdisco’s patented DConE active-active replication technology. DConE sets a requirement that all replicating nodes that synchronize data with each other are joined in a "membership". Memberships are coordinated groups of nodes where each node takes on a particular role in the replication system.

In versions of WANdisco Fusion prior to 2.11, memberships were manually created using the UI. Now memberships for all required combinations of zones are created automatically, making the creation of Replication Rules simpler. However, you can still interact with memberships through the API if needed.

Creating resilient Memberships

WANdisco Fusion is able to maintain HDFS replication even after the loss of WANdisco Fusion nodes from a cluster. However, there are some configuration rules that are worth considering:

Rule 1: Understand Learners and Acceptors

The unique Active-Active replication technology used by WANdisco Fusion is an evolution of the Paxos algorithm; as such, we use some Paxos concepts that are useful to understand:

-

Learners:

Learners are the WANdisco Fusion nodes that are involved in the actual replication of Namespace data. When changes are made to HDFS metadata, these nodes raise a proposal for the changes to be made on all the other copies of the filesystem space on the other data centers running WANdisco Fusion within the membership.

Learner nodes are required for the actual storage and replication of HDFS data. You need a learner node wherever you need to store a copy of the shared HDFS data.

-

Acceptors:

All changes being made in the replicated space at each data center must be made in exactly the same order. This is a crucial requirement for maintaining synchronization. Acceptors are nodes that take part in the vote for the order in which proposals are played out.

Acceptor nodes are required for keeping replication going. You need enough Acceptors to ensure that agreement over proposal ordering can always be reached, even after accounting for possible node loss. For configurations where there is an even number of Acceptors, it is possible that voting could become tied. For this reason, an Acceptor node can be made into a tie-breaker, which has slightly more voting power so that it can outvote another single Acceptor node.

Rule 2: Replication groups should have a minimum membership of three learner nodes

Two-node clusters (running two WANdisco Fusion servers) are not fault tolerant; you should strive to replicate according to the following guideline:

-

The number of learner nodes required to survive the loss of N nodes = 2N+1

where N is the number of nodes you need to be able to lose. So, to survive the loss of a single WANdisco Fusion server-equipped data center, you need a minimum of 2x1+1 = 3 nodes.

In order to keep on replicating after losing a second node, you need 5 nodes.
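The 2N+1 guideline can be expressed as two small helper functions. This is a sketch of the arithmetic only; the function names are illustrative and are not part of any WANdisco Fusion API.

```python
def learners_required(n: int) -> int:
    """Minimum number of learner nodes that survives the loss of n nodes (2N+1)."""
    if n < 0:
        raise ValueError("n must be zero or more")
    return 2 * n + 1


def failures_tolerated(learners: int) -> int:
    """Largest N with 2N+1 <= learners: how many nodes can be lost while a majority remains."""
    return max(learners - 1, 0) // 2


for n in (1, 2):
    print(f"to survive {n} lost node(s) you need {learners_required(n)} learners")
print(f"a two-node group tolerates {failures_tolerated(2)} failures")
```

The inverse function makes the point about two-node groups explicit: adding a second node does not let the group survive any failure, whereas a third node does.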

Rule 3: Learner Population - resilience vs rightness

-

During the installation of each of your nodes, you may configure the Content Node Count. This is the number of other learner nodes in the replication group that need to receive the content for a proposal before the proposal can be submitted for agreement.

Setting this number to 1 ensures that replication won’t halt if some nodes are behind and have not yet received replicated content. This strategy reduces the chance that a temporary outage or heavily loaded node will stop replication; however, it also increases the risk that namenode data will go out of sync (requiring admin intervention) in the event of an outage.
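The Content Node Count rule can be pictured as a gate in front of agreement. In this minimal sketch the node names are hypothetical, and the function is an illustration of the rule as described above (only other learners that already hold the content count towards the threshold), not Fusion's implementation.

```python
from typing import AbstractSet


def ready_for_agreement(received_by: AbstractSet[str],
                        other_learners: AbstractSet[str],
                        content_node_count: int) -> bool:
    """True once at least `content_node_count` of the other learners hold the proposal's content."""
    return len(received_by & other_learners) >= content_node_count


others = {"node-b", "node-c", "node-d"}  # hypothetical learners in the replication group
only_b = {"node-b"}                      # node-b has the content; node-c and node-d are lagging

lenient = ready_for_agreement(only_b, others, content_node_count=1)  # proceeds despite lagging nodes
strict = ready_for_agreement(only_b, others, content_node_count=2)   # waits for one more learner
```

A count of 1 lets the proposal proceed while two learners lag behind, which keeps replication moving but leaves fewer copies of the content if the one up-to-date node is then lost.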

Rule 4: 2 nodes per site provides resilience and performance benefits

Running with two nodes per site provides the following advantages:

-

It provides every site with a local hot-backup of the namenode data.

-

It enables a site to load-balance namenode access between the nodes, which can improve performance during times of heavy usage.

-

Provided the nodes are Acceptors, it increases the population of nodes that can form agreement and improves resilience for replication.

3.2.5. Replication Frequently Asked Questions

What stops a file replication between zones from failing if an operation such as a file name change is done on a file that is still transferring to another zone?

Operations such as a rename only affect metadata, so as long as the file’s underlying data isn’t changed, the operation to transfer the file will complete. Only then will the rename operation play out. When you start reading a file for the first time, you acquire all the block locations necessary to fulfill the read; at this point, metadata changes won’t halt the transfer of the file to another zone.

3.2.6. Agreement recovery in WANdisco Fusion

This section explains why, when monitoring replication recovery, the execution of agreements on the filesystem may occur out of the expected order on the catching-up node.

If the WAN link between clusters is temporarily dropped, you may notice that when the link returns there is a brief delay before the reconnected zones are back in sync, and that recovery appears to apply filesystem operations out of order with respect to the coordinated operations associated with each agreement.

This behaviour can be explained as follows:

-

The "non-writer" nodes review the coordinated agreements to determine which agreements the current writer has processed and which agreements they can remove from their own store, where they are kept in case the writer node fails and they are selected to take over.

Why are proposals seemingly being delivered out-of-order?

This is related, and explains why you will see coordinated operations written "out-of-order" in the filesystem. Internally within Fusion, "non-interfering" agreements are processed in parallel so that throughput is not hindered by operations that take a long time, such as a large file copy.

Example

Consider the following global sequence, where /repl1 is the replicated directory:

1. Copy 10TB file to /repl1/dir1/file1

2. Copy 10TB file to /repl1/dir2/file1

3. Chown /repl1/dir1

-

Agreements 1 and 2 may be executed in parallel since they do not interfere with one another.

-

However, agreement 3 must wait for agreement 1 to complete before it can be applied to the filesystem.

-

If agreement 2 completes before agreement 1, then its operation will be recorded before the preceding agreement’s and will look, on the surface, like an out-of-order filesystem operation.

Under the hood

DConE’s Output Proposal Sequence (OPS) delivers agreed values in strict sequence, one-at-a-time, to an application. Applying these values to the application state in the sequence delivered by the OPS ensures the state is consistent with other replicas at that point in the sequence. However, an optimization can be made: if two or more values do not interfere with one another they may be applied in parallel without adverse effects. This parallelization has several benefits, for example:

-

It may increase the rate of agreed values applied to the application state if there are many non-interfering agreements;

-

It prevents an agreement that takes a long time to complete (such as a large file transfer) from blocking later agreements that aren’t dependent on that agreement having completed.
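The three-agreement example can be reproduced with a small simulation. This is a sketch under stated assumptions: it treats two agreements as interfering when one path equals, or is an ancestor of, the other, which is a simplification chosen for illustration rather than DConE's actual interference rule, and the durations are made-up numbers.

```python
from dataclasses import dataclass
from posixpath import normpath


@dataclass(frozen=True)
class Agreement:
    seq: int   # position in the Output Proposal Sequence (OPS)
    op: str    # illustrative label only, e.g. "copy" or "chown"
    path: str


def interferes(a: str, b: str) -> bool:
    """Assumed rule: two paths interfere if they are equal or one is an ancestor of the other."""
    a, b = normpath(a), normpath(b)
    return (a == b
            or b.startswith(a.rstrip("/") + "/")
            or a.startswith(b.rstrip("/") + "/"))


def dependencies(ops):
    """For each agreement, the earlier agreements (in OPS order) that it must wait for."""
    return {later.seq: [earlier.seq for earlier in ops[:i] if interferes(earlier.path, later.path)]
            for i, later in enumerate(ops)}


def completion_order(ops, duration):
    """Start each agreement as soon as its dependencies finish; return seqs in finishing order."""
    deps = dependencies(ops)
    finish = {}
    for op in ops:  # OPS order guarantees every dependency is already in `finish`
        start = max((finish[d] for d in deps[op.seq]), default=0)
        finish[op.seq] = start + duration[op.seq]
    return sorted(finish, key=lambda seq: (finish[seq], seq))


ops = [
    Agreement(1, "copy", "/repl1/dir1/file1"),
    Agreement(2, "copy", "/repl1/dir2/file1"),
    Agreement(3, "chown", "/repl1/dir1"),
]
deps = dependencies(ops)                            # agreement 3 waits only for agreement 1
order = completion_order(ops, {1: 10, 2: 4, 3: 1})  # hypothetical durations
```

Agreement 2 finishes first even though it comes second in the OPS, which is exactly the "out-of-order" appearance described above, while agreement 3 still waits for agreement 1.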

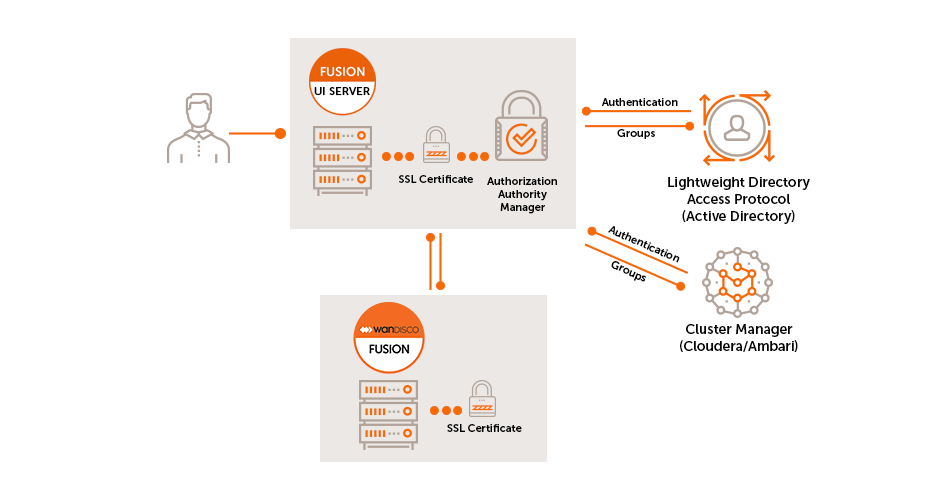

3.2.7. Authorization and Authentication

Overview

The Fusion user interface provides an LDAP/AD connection, allowing Fusion users to be managed through a suitable Authorization Authority, such as an LDAP, Active Directory or Cloudera Manager-based system. Read more about connecting to LDAP/Active Directory.

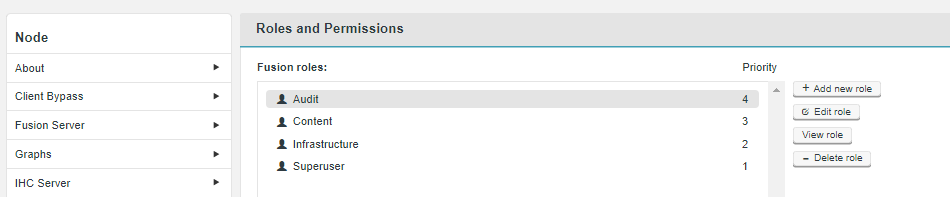

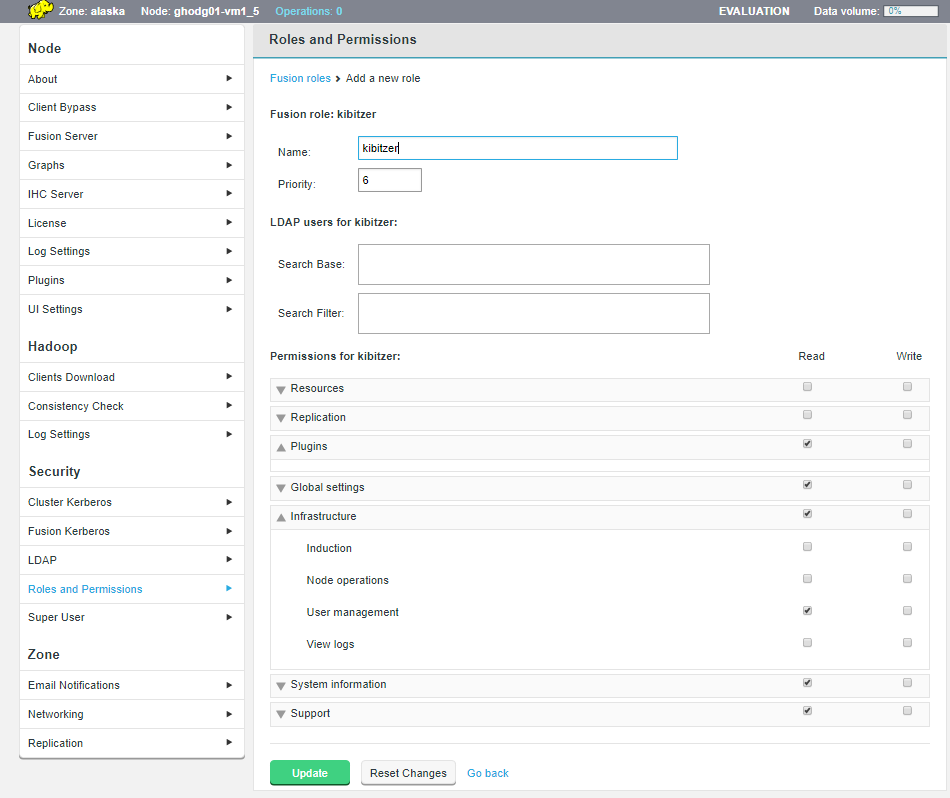

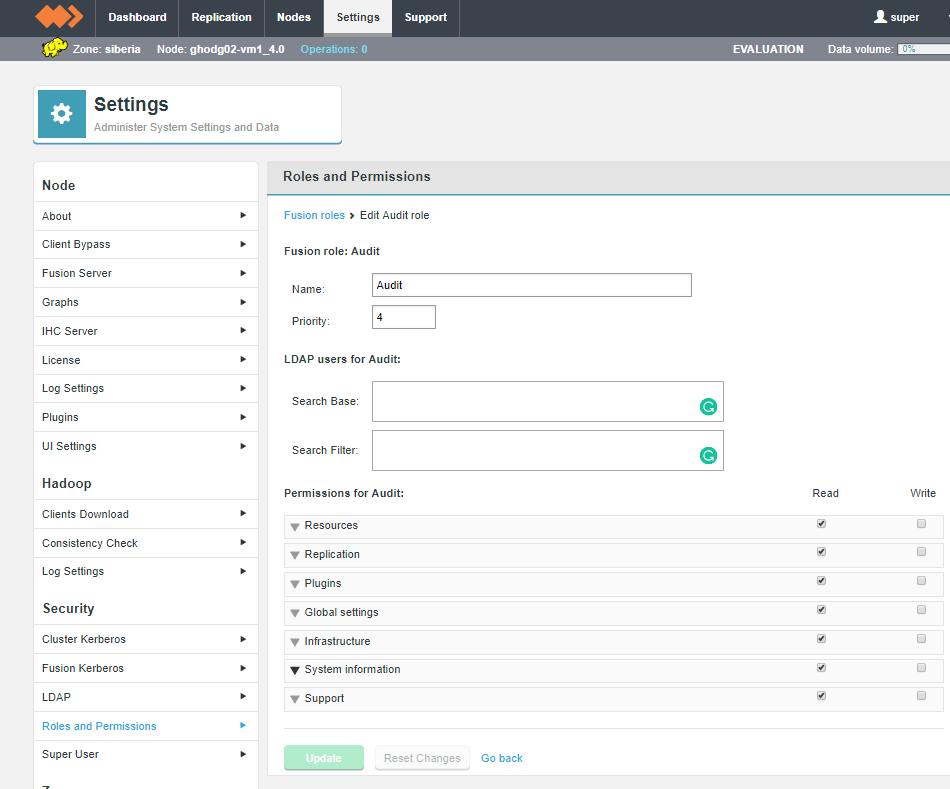

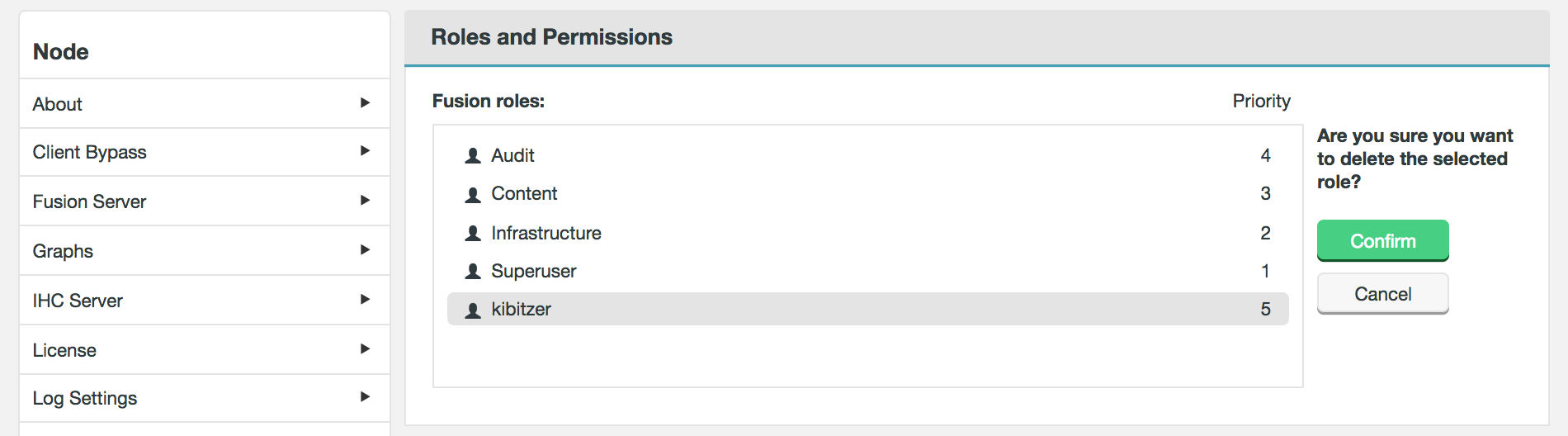

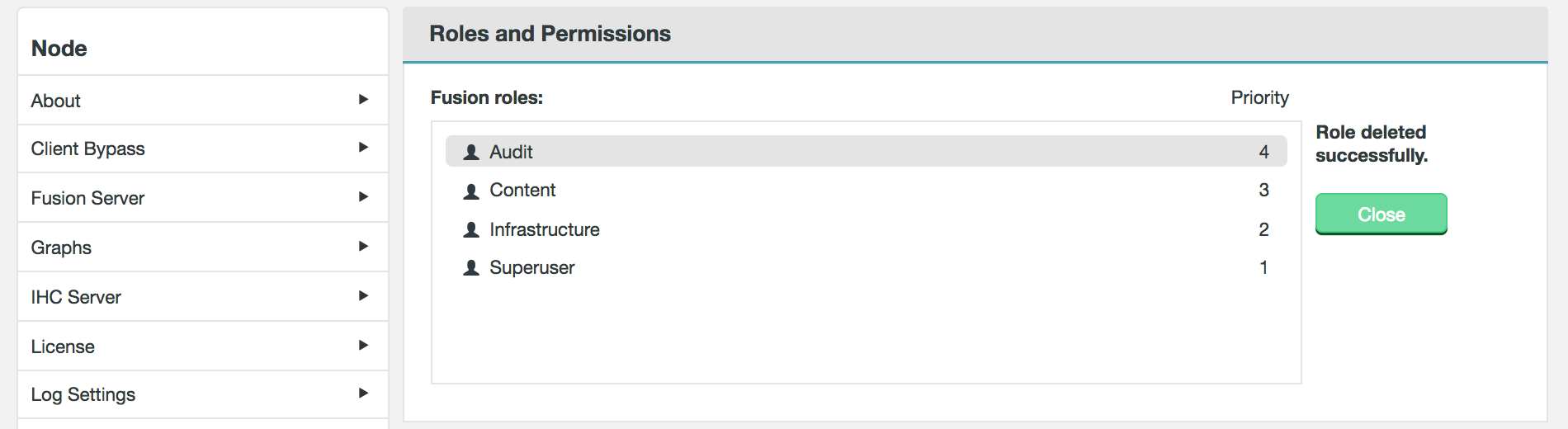

Users can have their access to Fusion fine-tuned using assigned roles. Each Fusion user can be assigned one or more roles through the organization’s authorization authority. When the user logs into Fusion, their account’s associated roles are checked and their role with the highest priority is applied to their access.
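The "highest priority wins" rule can be sketched as follows. The role names and priority numbers are hypothetical placeholders, not Fusion's default roles, and the sketch assumes that a larger number means a higher priority.

```python
# Hypothetical roles and priorities; a larger number means a higher priority (assumption).
ROLE_PRIORITY = {"viewer": 10, "operator": 50, "administrator": 100}


def effective_role(user_roles):
    """Return the highest-priority known role held by the user, or None if none are recognised."""
    known = [role for role in user_roles if role in ROLE_PRIORITY]
    return max(known, key=ROLE_PRIORITY.__getitem__, default=None)


# Roles as they might be returned by the Authorization Authority for one user (illustrative).
applied = effective_role(["viewer", "operator", "unmapped-ldap-group"])
```

Groups that are not mapped to any Fusion role are simply ignored, so a user whose groups map to nothing ends up with no role.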

Roles can be mapped to Fusion’s complete set of functions and features, so that user access can be as complete or as limited as your organization’s guidelines dictate. A set of roles is provided by default, which you can read about in Roles and Permissions; alternatively, you can create your own user roles and limit permissions to specific Fusion functions.

The Fusion UI server uses a User Authorization abstraction, which incorporates the following sub-systems:

Authorization Authority Manager

This component is responsible for mapping the authenticated user to one or more roles based on their presence in the "Authorization Authority".

Authorization Authority

This refers to the system that stores Authorization mapping information. This could be Active Directory, LDAP, Cloudera Manager, etc.

The Authorization Authority Manager is responsible for:

-

Managing connectivity to the authority

-

Mapping authority grouping to Fusion Roles

-

Syncing changes in user authorization

-

Invalidating sessions

| If an Authorization Authority is not connected then only the standard Fusion "admin" account will be able to access the Fusion user interface. |

Role Manager

The Role Manager defines the available user roles and maps them to sets of feature toggles.

-

A Feature Toggle represents each of the UI features that a user can interact with (both read and write actions).

-

The set of Feature Toggles is static and mapped into the system per release.

-

The set of Features mapped to each role can be managed dynamically in the running system.

-

This data is stored in the underlying (replicated) file system, enabling all Fusion nodes in a zone to take advantage of the same configuration.

-

A default set of roles is provided, each with a suitable set of mappings that match expected user types. See Roles and Permissions.

API filter

Calls to Fusion’s REST APIs are guarded by a filter, which checks client calls against the specified roles and decides whether each call is authorized. The filter uses a two-stage check:

-

Check that the supplied client token is valid and get the role(s) that it maps to. If no token is supplied, a 401 error should be returned, which should be interpreted as the need for the client to log in with their credentials and generate a token.

-

Check that the role for the given token is valid for the call being made (by checking against the permissions for the relevant feature). If it is not valid, a 403 error should be returned.
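The two-stage check can be sketched as a function that returns the HTTP status a call would receive. Everything here is hypothetical: the token store, the feature names and the role-to-feature mapping stand in for Fusion's real Role Manager data. The sketch also assumes that an unknown token is treated like a missing one (401), and that a call is allowed if any role mapped to the token grants the feature.

```python
# Hypothetical data standing in for issued tokens and the Role Manager's feature mappings.
TOKENS = {"token-abc": {"viewer"}}  # token -> roles it maps to
ROLE_FEATURES = {
    "viewer": {"replication-rules.read"},
    "administrator": {"replication-rules.read", "replication-rules.write"},
}


def filter_call(token, feature):
    """Return 401, 403 or 200 for a call that needs `feature`, following the two stages above."""
    # Stage 1: is there a valid token, and which role(s) does it map to?
    roles = TOKENS.get(token) if token else None
    if not roles:
        return 401  # client should log in with credentials and generate a token
    # Stage 2: does any of those roles grant the feature this call needs?
    if not any(feature in ROLE_FEATURES.get(role, set()) for role in roles):
        return 403
    return 200


no_token = filter_call(None, "replication-rules.read")
read_ok = filter_call("token-abc", "replication-rules.read")
write_denied = filter_call("token-abc", "replication-rules.write")
```

Keeping the two stages separate is what lets a client distinguish "log in again" (401) from "you are logged in but not permitted" (403).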

3.3. Deployment models

The following deployment models illustrate some of the common use cases for running WANdisco Fusion.

3.3.1. Analytic off-loading

In a typical on-premises Hadoop cluster, data ingest and analytic jobs all run through the same infrastructure, where some activities impose a load on the cluster that can impact other activities. WANdisco Fusion allows you to divide up the workflow across separate environments, which lets you isolate the overheads associated with some events. You can ingest in one environment while using a different environment, where capacity is provided, to run the analytic jobs. You get more control over each environment’s performance.

-

You can ingest data from anywhere and query that at scale within the environment.

-

You can ingest data on premises (or wherever the data is generated) and query it at scale in another optimized environment, such as a cloud environment with elastic scaling that can be spun up only when query jobs are queued. In this model, you may ingest data continuously but you don’t need to run a large cluster 24 hours per day for query jobs.

3.3.2. Multi-stage jobs across multiple environments

A typical Hadoop workflow might involve a series of activities: ingesting data, cleaning data and then analyzing the data in a short series of steps. You may be generating intermediate output to be run against end-stage reporting jobs that perform analytical work. Running all these work streams on a single cluster could require a lot of careful coordination between different types of workloads. Conducting multi-stage jobs is a common chain of query activities for Hadoop applications, where you might ingest raw data, refine and augment it with other information, then eventually run analytic jobs against your output on a periodic basis, for reporting purposes, or in real time.

In a replicated environment, however, you can control where those job stages are run. You can split this activity across multiple clusters to ensure the query jobs needed for reporting purposes will have access to the capacity necessary to ensure that they run within SLAs. You can also run different types of clusters to make more efficient use of the overall chain of work that occurs in a multi-stage job environment. You could have a cluster that is tuned for the most efficient ingest, while running a completely different kind of environment that is tuned for another task, such as the end-stage reporting jobs that run against processed and augmented data. Running with LiveData across multiple environments allows you to run each type of activity in the most efficient way.

3.3.3. Migration

WANdisco Fusion allows you to move both Hive data stored in HCFS and the associated Hive metadata from an on-premises cluster to cloud-based infrastructure. There’s no need to stop your cluster activity; the migration can happen without impact to your Hadoop operations.

3.3.4. Disaster Recovery

As data is replicated between nodes on a continuous basis, WANdisco Fusion is an ideal solution for protecting your data from loss. If a disaster occurs, there’s no complicated switchover as the data is always operational.

3.3.5. Hadoop to S3

WANdisco Fusion can be used to migrate or replicate data from a Hadoop platform to S3 or S3-compatible storage. WANdisco’s S3 plugin provides:

-

LiveData transactional replication from the on-premises cluster to an S3 bucket

-

Consistency check of data between the Hadoop platform and the S3 bucket

-

Point-in-time batch operations to return to consistency from Hadoop to S3

-

Point-in-time batch operations to return to consistency from S3 back to Hadoop

However, it does not provide any facility for LiveData transactional replication from S3 to Hadoop.

3.4. Working in the Hadoop ecosystem

This section covers the final step in setting up a WANdisco Fusion cluster, where supported Hadoop applications are plugged into WANdisco Fusion’s synchronized distributed namespace. It isn’t possible to cover all the requirements for all the third-party software covered here, so we strongly recommend that you get hold of the corresponding documentation for each Hadoop application before you work through these procedures.

3.4.1. Hadoop File System Configuration

The following section explains how Fusion interacts with and replicates to your chosen file system.

| To be clear, this is actually Hadoop configuration, not Fusion configuration. If you’re not familiar with how the Hadoop File System configuration works, you may need to review your Hadoop documentation. |

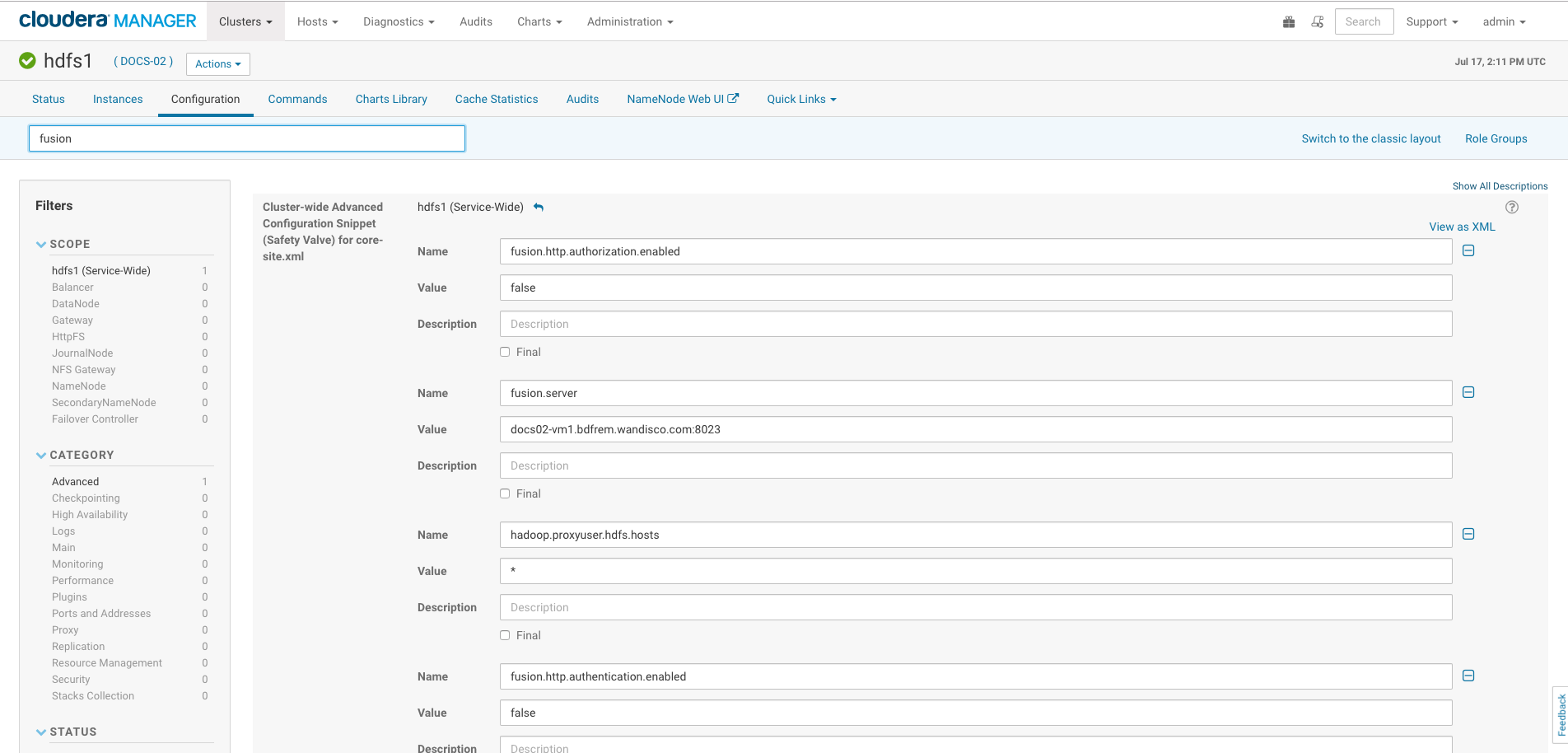

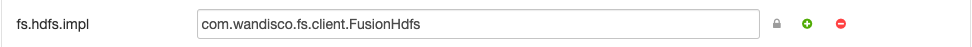

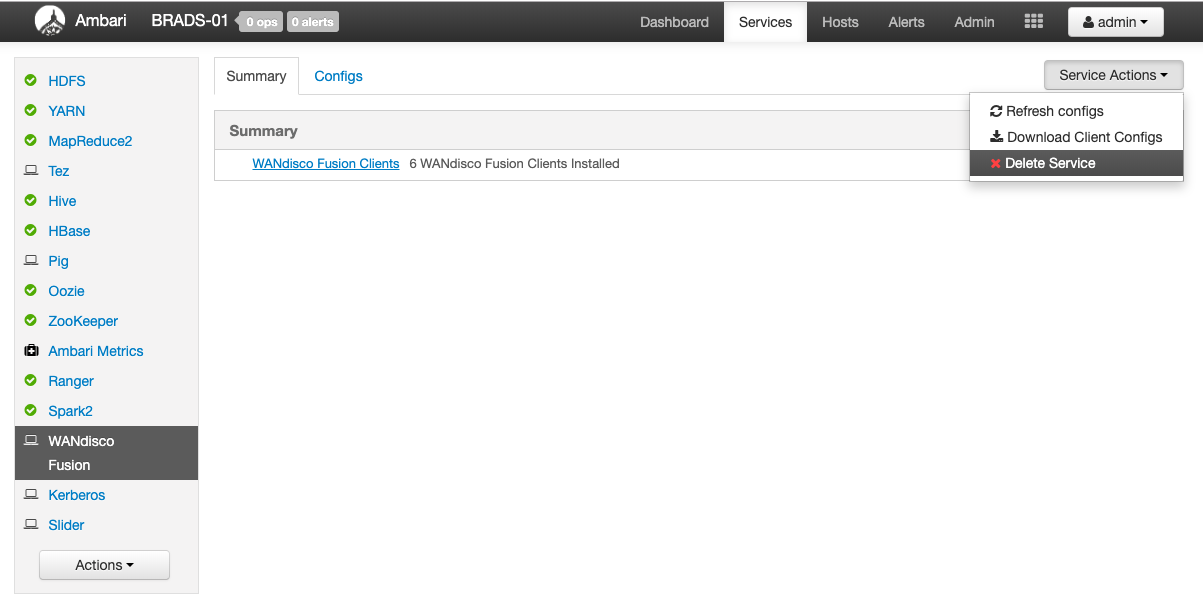

There are several options available for configuring Hadoop clients to work with Fusion, with different configurations suiting different types of deployment. Configuration can be done during installation or as an in-production configuration change, through the Fusion UI, or even manually by amending a cluster’s core-site, via the Hadoop manager. This latter option can be used to make temporary configuration changes to assist in troubleshooting or as part of a maintenance or DR operation.

-

The Hadoop file system looks at either your input URI or fs.defaultFS for a scheme, say "example".

-

It then looks for the implementation property, i.e. fs.example.impl, to instantiate a filesystem.

-

In order to instantiate Fusion, the implementation property needs to point to a compatible Fusion implementation, e.g. fs.example.impl = hcfs.

-

Fusion then uses fs.fusion.underlyingFsClass to identify the actual underlying filesystem, and therefore understands that "example" really maps to example-FileSystem.
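The lookup chain above can be sketched against a dictionary of core-site properties. This is a simplified model, not Hadoop's or Fusion's code: real Hadoop also falls back to file systems registered through Java's service loader, and the property values (including the namenode:8020 address) are hypothetical. Only the class names FusionHcfs, FusionHdfs and DistributedFileSystem are taken from this guide; when fs.fusion.underlyingFsClass is not set, the sketch assumes the underlying scheme's fs.<scheme>.impl is used.

```python
from urllib.parse import urlparse

FUSION_CLASSES = {"com.wandisco.fs.client.FusionHcfs", "com.wandisco.fs.client.FusionHdfs"}

# Hypothetical core-site.xml values for an HDFS cluster using the fusion:// scheme.
CORE_SITE = {
    "fs.defaultFS": "hdfs://namenode:8020",
    "fs.hdfs.impl": "org.apache.hadoop.hdfs.DistributedFileSystem",
    "fs.fusion.impl": "com.wandisco.fs.client.FusionHcfs",
    "fs.fusion.underlyingFs": "hdfs://namenode:8020",
}


def resolve(uri, conf):
    """Return (client class, underlying class or None) for a path, following the steps above."""
    # Step 1: take the scheme from the URI, falling back to fs.defaultFS.
    scheme = urlparse(uri).scheme or urlparse(conf["fs.defaultFS"]).scheme
    # Step 2: look up fs.<scheme>.impl.
    impl = conf.get(f"fs.{scheme}.impl")
    if impl is None:
        raise KeyError(f"fs.{scheme}.impl is not configured")
    # Step 3: only a Fusion class brings Fusion into play.
    if impl not in FUSION_CLASSES:
        return impl, None
    # Step 4: find the real file system underneath Fusion.
    underlying_scheme = urlparse(conf["fs.fusion.underlyingFs"]).scheme
    underlying = conf.get("fs.fusion.underlyingFsClass") or conf.get(f"fs.{underlying_scheme}.impl")
    return impl, underlying


fusion_path = resolve("fusion:///repl1/data", CORE_SITE)
plain_path = resolve("/tmp/scratch", CORE_SITE)  # no scheme, so fs.defaultFS (hdfs) applies
```

In this configuration a fusion:/// path is handled by FusionHcfs on top of HDFS, while a scheme-less path goes straight to HDFS without Fusion being involved.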

URI (Uniform Resource Identifier)

Client access to Fusion is chiefly driven by URI selection. The Hadoop URI consists of a scheme, authority, and path, with the scheme and authority together determining the FileSystem implementation. The default is hdfs, which is referred to by fs.hdfs.impl, the property that points to the Java class that handles references to files under the hdfs:// prefix. This prefix is entirely arbitrary, and you could use any prefix that you want, provided that it points to an appropriate ".impl" that will handle the filesystem commands that you need.

| MapR must use WANdisco’s native "fusion:///" URI, instead of the default hdfs:///. |

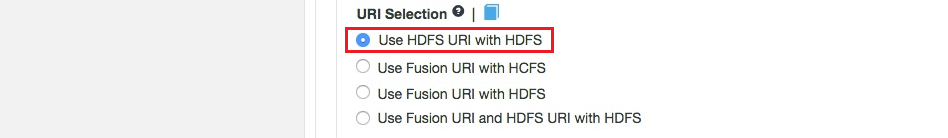

Fusion (on-premises) provides 4 options for URI selection:

HDFS URI with HDFS

This option is available for deployments where the Hadoop applications support neither the WANdisco Fusion URI nor the HCFS standards. WANdisco Fusion operates entirely within HDFS.

This configuration will not allow paths with the fusion:/// URI to be used; only paths starting with hdfs:/// or with no scheme that correspond to a mapped path will be replicated. The underlying file system will be an instance of the HDFS DistributedFileSystem, which will support applications that aren’t written to the HCFS specification.

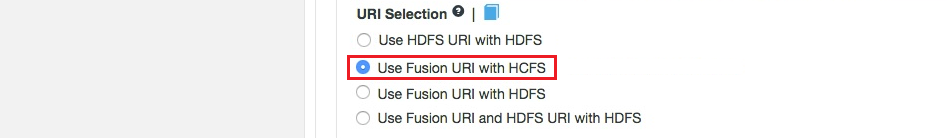

Fusion URI with HCFS

This is the default option that applies if you don’t enable Advanced Options. When selected, you need to use fusion:// for all data that must be replicated over an instance of the Hadoop Compatible File System. If your deployment includes Hadoop applications that are either unable to support the Fusion URI or are not written to the HCFS specification, this option will not work.

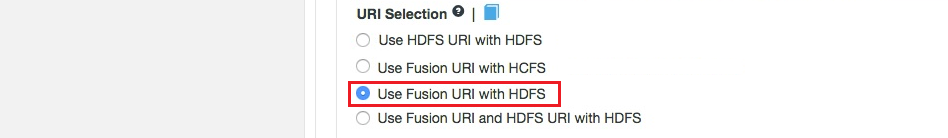

Fusion URI with HDFS

This differs from the default in that while the WANdisco Fusion URI is used to identify data to be replicated, the replication is performed using HDFS itself. This option should be used if you are deploying applications that can support the WANdisco Fusion URI but not the Hadoop Compatible File System.

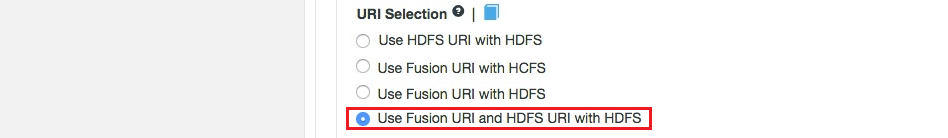

Fusion URI and HDFS URI with HDFS

This "mixed mode" supports all the replication schemes (fusion://, hdfs:// and no scheme) and uses HDFS for the underlying file system, to support applications that aren’t written to the HCFS specification.
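The four on-premises options differ mainly in which URI schemes are replicated. The sketch below encodes that difference as a lookup table; the option labels are copied from the headings above, while the is_replicated helper and its replicated_dirs argument (standing in for the mapped replicated paths) are hypothetical illustrations. Which file system performs the replication (HCFS or HDFS) is not modelled.

```python
from urllib.parse import urlparse

# Schemes replicated by each on-premises option, as described above ("" means no scheme).
REPLICATED_SCHEMES = {
    "HDFS URI with HDFS": {"hdfs", ""},
    "Fusion URI with HCFS": {"fusion"},
    "Fusion URI with HDFS": {"fusion"},
    "Fusion URI and HDFS URI with HDFS": {"fusion", "hdfs", ""},
}


def is_replicated(option, uri, replicated_dirs):
    """True if `uri` uses a scheme the option replicates and falls under a replicated directory."""
    parsed = urlparse(uri)
    if parsed.scheme not in REPLICATED_SCHEMES[option]:
        return False
    path = parsed.path
    return any(path == d or path.startswith(d.rstrip("/") + "/") for d in replicated_dirs)


dirs = ["/repl1"]  # hypothetical replicated (mapped) directory
hdfs_mode = is_replicated("HDFS URI with HDFS", "hdfs:///repl1/a", dirs)
hdfs_mode_fusion_uri = is_replicated("HDFS URI with HDFS", "fusion:///repl1/a", dirs)
mixed_no_scheme = is_replicated("Fusion URI and HDFS URI with HDFS", "/repl1/a", dirs)
```

Under "HDFS URI with HDFS" the same path is replicated when written as hdfs:/// but rejected as fusion:///, whereas mixed mode accepts it with any of the three forms.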

Fusion (cloud) provides 2 options for URI selection:

Fusion URI with HCFS

When selected, you use fusion:// to identify the data to be replicated, and replication is performed over an instance of the Hadoop Compatible File System.

Use this option if you are intending to limit replication to the fusion:// URI.

| Platforms that must run with Fusion URI with HCFS |
| Azure |
| LocalFS |
| OnTapLocalFs |
| UnmanagedBigInsights |
| UnmanagedSwift |
| UnmanagedGoogle |
| UnmanagedS3 |
| UnmanagedEMR |
| MapR |

Default fs

Uses the default file system. Use this option if you want everything to be replicated, using the settings of your implementation and defaultFS properties.

Examples:

-

To use the default fs, ADL requires fs.adl.impl instead of fs.fusion.impl.

-

To use the default fs, WASB requires fs.wasb.impl instead of fs.fusion.impl.

Implementation property

The impl (Implementation) property is the abstract FileSystem implementation that will be used:

<property>
  <name>fs.<implementation-name>.impl</name>
  <value>valid.impl.class.for.an.hcfs.implementation</value>
</property>

DefaultFS property

Hadoop has a configuration property, fs.defaultFS, that determines which scheme will be used when an application interacting with the FileSystem API doesn’t specify a file system type (e.g. just using a URI like /path/to/file).

For example, fs.defaultFS is set to hdfs://<namenode>:<port> in an HDFS cluster, and to adl://<account>.azuredatalakestore.net in an HDInsight cluster using ADLS by default. The selection of defaultFS is driven by your cluster’s storage file system.

fs.fusion.underlyingFs

The address of the underlying filesystem, which might be the same as the fs.defaultFS. However, in cases like EMRFS, the fs.defaultFS points to a local HDFS built on the instance storage which is temporary, with persistent data being stored in S3. In this case S3 storage is likely to be the fs.fusion.underlyingFs. There’s no default value, but it must be present.

The underlying filesystem needs a URI like hdfs://namenode:port, or adl://<Account Name>.azuredatalakestore.net/, and there needs to be a valid fs.<scheme>.impl setting in place for whatever that underlying file system is.

fs.fusion.underlyingFsClass

The name of the implementation class for the underlying file system specified with fs.fusion.underlyingFs. Fusion expects particular implementation classes to be associated with common URI schemes (e.g. S3://, hdfs://), used by Hadoop clients when accessing the file system.

For example, org.apache.hadoop.fs.azure.NativeAzureFileSystem$Secure would be used if fs.fusion.underlyingFs is set to adls://<Account Name>.azuredatalakestore.net.

If you use alternative implementation classes for the scheme configured in fs.fusion.underlyingFs, you need to specify the name of the implementation for the underlying file system with this item. You also need to specify the implementation if using a URI scheme that is not one of those known to the defaults.

In turn, Fusion only gets involved if the implementation class for the file system used by an application is one of the Fusion implementations of the FileSystem API, i.e. com.wandisco.fs.client.FusionHcfs or com.wandisco.fs.client.FusionHdfs.

So if fs.<scheme>.impl refers to either of those, you need the correct settings for fs.fusion.underlyingFs and fs.fusion.underlyingFsClass (although the latter has defaults that should work, which are determined by the text used for the scheme).

If you are using com.wandisco.fs.client.FusionHdfs, fs.fusion.underlyingFs must be an hdfs URI, like hdfs://<namenode>:<port>, and fs.hdfs.impl must refer to org.apache.hadoop.hdfs.DistributedFileSystem. You will need to use com.wandisco.fs.client.FusionHcfs if the underlying file system is anything other than HDFS.
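The rules in this section can be collected into a small configuration check. This is a hypothetical sketch over a dictionary of core-site properties, not a Fusion tool; it encodes only the rules stated above: fs.fusion.underlyingFs must be present, the underlying scheme needs an fs.<scheme>.impl, and FusionHdfs needs an hdfs:// underlying file system with fs.hdfs.impl set to DistributedFileSystem.

```python
from urllib.parse import urlparse

FUSION_HDFS = "com.wandisco.fs.client.FusionHdfs"
FUSION_HCFS = "com.wandisco.fs.client.FusionHcfs"
DFS = "org.apache.hadoop.hdfs.DistributedFileSystem"


def config_problems(conf):
    """List violations of the rules above in a dict of core-site properties (empty list if none)."""
    fusion_classes = {value for key, value in conf.items()
                      if key.startswith("fs.") and key.endswith(".impl")
                      and value in (FUSION_HDFS, FUSION_HCFS)}
    if not fusion_classes:
        return []  # no fs.<scheme>.impl names a Fusion class, so Fusion is not involved
    underlying = conf.get("fs.fusion.underlyingFs")
    if not underlying:
        return ["fs.fusion.underlyingFs must be set (it has no default)"]
    scheme = urlparse(underlying).scheme
    problems = []
    if f"fs.{scheme}.impl" not in conf:
        problems.append(f"fs.{scheme}.impl is needed for the underlying file system")
    if FUSION_HDFS in fusion_classes:
        if scheme != "hdfs":
            problems.append("FusionHdfs needs an hdfs:// underlying file system; use FusionHcfs instead")
        if conf.get("fs.hdfs.impl") != DFS:
            problems.append(f"FusionHdfs needs fs.hdfs.impl set to {DFS}")
    return problems


hdfs_ok = config_problems({
    "fs.fusion.impl": FUSION_HDFS,
    "fs.fusion.underlyingFs": "hdfs://namenode:8020",  # hypothetical address
    "fs.hdfs.impl": DFS,
})
```

Returning a list rather than stopping at the first error lets an administrator see every rule a candidate configuration breaks in one pass.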

3.4.2. Hive

This section describes how to integrate WANdisco Fusion with Apache Hive, with the following goals:

-

Replicate Hive table storage.

-

Use fusion URIs as store paths.

-

Use fusion URIs as load paths.

-

Share the Hive metastore between two clusters.

Prerequisites

-

Knowledge of Hive architecture.

-

Ability to modify Hadoop site configuration.

-

WANdisco Fusion installed and operating.

Replicating Hive Storage via fusion:///

The following requirements apply if you have deployed WANdisco Fusion using its native fusion:/// URI.

To store a Hive table in WANdisco Fusion, specify a WANdisco Fusion URI when creating the table. For example, to create a table called log that is stored in a replicated directory:

CREATE TABLE log(requestline string) STORED AS TEXTFILE LOCATION 'fusion:///repl1/hive/log';

Note: Replicating table storage without sharing the Hive metadata creates a logical discrepancy in the Hive catalog. For example, if a table is defined on one cluster and its storage is replicated through the HCFS to another cluster, a Hive user on the other cluster needs to define the table locally in order to use it.

| Don’t use namespace: make sure you don’t use the namespace name, e.g. use fusion:///user/hive/log, not fusion://nameserviceA/user/hive/log. |
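A namespace in the URI is easy to miss, so you can check a table location before you use it in a CREATE TABLE statement. The following is a minimal Python sketch that uses the standard urllib.parse module. is_namespace_free_fusion_uri is a hypothetical helper name, and the statement it prints is the example above.

```python
from urllib.parse import urlparse


def is_namespace_free_fusion_uri(uri: str) -> bool:
    """Return True for fusion:///path URIs that carry no namespace (authority)."""
    parsed = urlparse(uri)
    # For "fusion:///repl1/hive/log" the authority (netloc) is empty;
    # for "fusion://nameserviceA/..." it is "nameserviceA".
    return parsed.scheme == "fusion" and parsed.netloc == "" and parsed.path.startswith("/")


location = "fusion:///repl1/hive/log"
if is_namespace_free_fusion_uri(location):
    print(f"CREATE TABLE log(requestline string) STORED AS TEXTFILE LOCATION '{location}';")
```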

Replicated directories as store paths

You can configure Hive to use WANdisco Fusion URIs as output paths for storing data. To do this, specify a Fusion URI when writing data back to the underlying Hadoop-compatible file system (HCFS). For example, to write data from a table called log to a file stored in a replicated directory:

INSERT OVERWRITE DIRECTORY 'fusion:///repl1/hive-out.csv' SELECT * FROM log;

Replicated directories as load paths

In this section we’ll describe how to configure Hive to use fusion URIs as input paths for loading data.

Loading data into a Hive table from a file using the fusion URI is not common. When loading data into Hive from files, the core-site.xml setting fs.defaultFS must also be set to fusion, which may not be desirable. It is much more common to load data from a local file using the LOCAL keyword:

LOAD DATA LOCAL INPATH '/tmp/log.csv' INTO TABLE log;

If you do wish to use a fusion URI as a load path, you must change the fs.defaultFS setting to use WANdisco Fusion, as noted in a previous section. Then you may run:

LOAD DATA INPATH 'fusion:///repl1/log.csv' INTO TABLE log;

Sharing the Hive metastore

Advanced configuration - please contact WANdisco before attempting

In this section we’ll describe how to share the Hive metastore between two clusters.

Since WANdisco Fusion can replicate the file system that contains the Hive data storage, sharing the metadata presents a single logical view of Hive to users on both clusters.

When sharing the Hive metastore, note that Hive users on all clusters will know about all tables. If a table is not actually replicated, Hive users on other clusters will experience errors if they try to access that table.

There are two options available.

Hive metastore available read-only on other clusters

In this configuration, the Hive metastore is configured normally on one cluster. On other clusters, the metastore process points to a read-only copy of the metastore database. MySQL can be used in master-slave replication mode to provide the metastore.

Hive metastore writable on all clusters

In this configuration, the Hive metastore is writable on all clusters.

-

Configure the Hive metastore to support high availability.

-

Place the standby Hive metastore in the second data center.

-

Configure both Hive services to use the active Hive metastore.

| Performance over WAN: performance of Hive metastore updates may suffer if the writes are routed over the WAN. |

Hive metastore replication

There are three strategies for replicating Hive metastore data with WANdisco Fusion:


Standard

For Cloudera CDH: See Hive Metastore High Availability.

For Hortonworks/Ambari: See High Availability for Hive Metastore.

Manual Replication

To replicate metastore data manually, make sure the DDL statements are applied on both clusters, then rescan the partitions (for example, with Hive's MSCK REPAIR TABLE <table_name> statement).

3.4.3. Impala

Prerequisites

-

Knowledge of Impala architecture.

-

Ability to modify Hadoop site configuration.

-

WANdisco Fusion installed and operating.

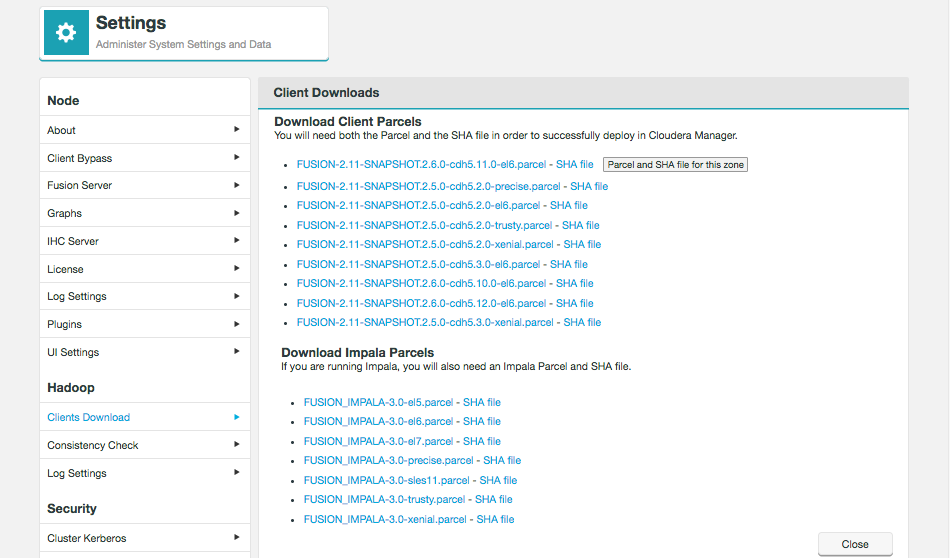

Impala Parcel

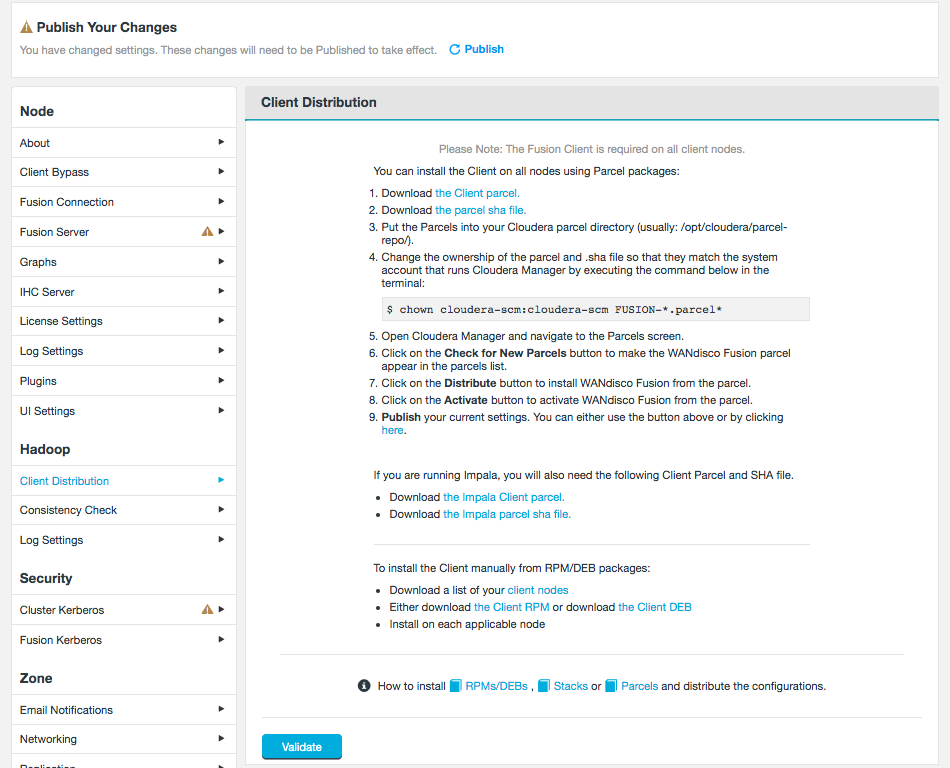

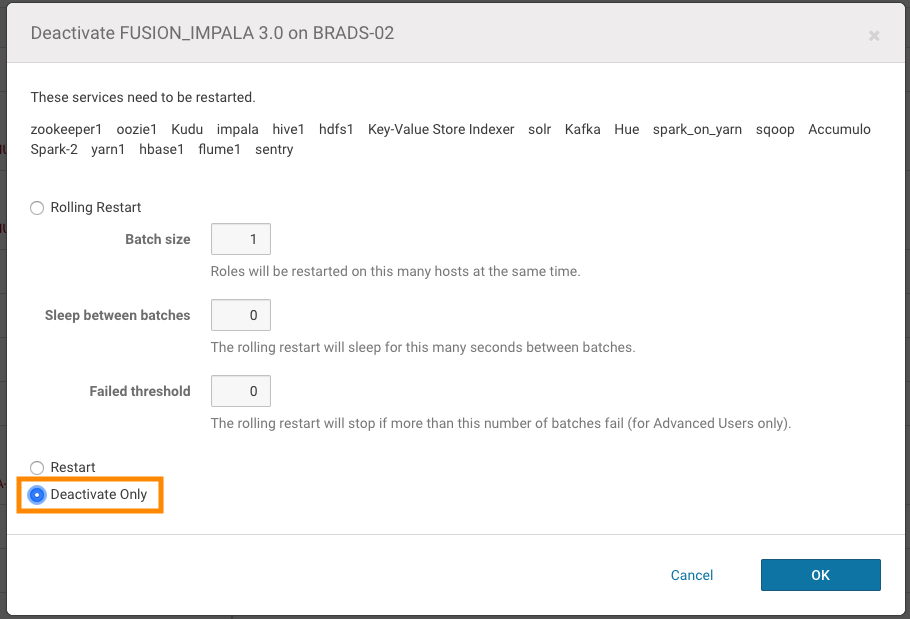

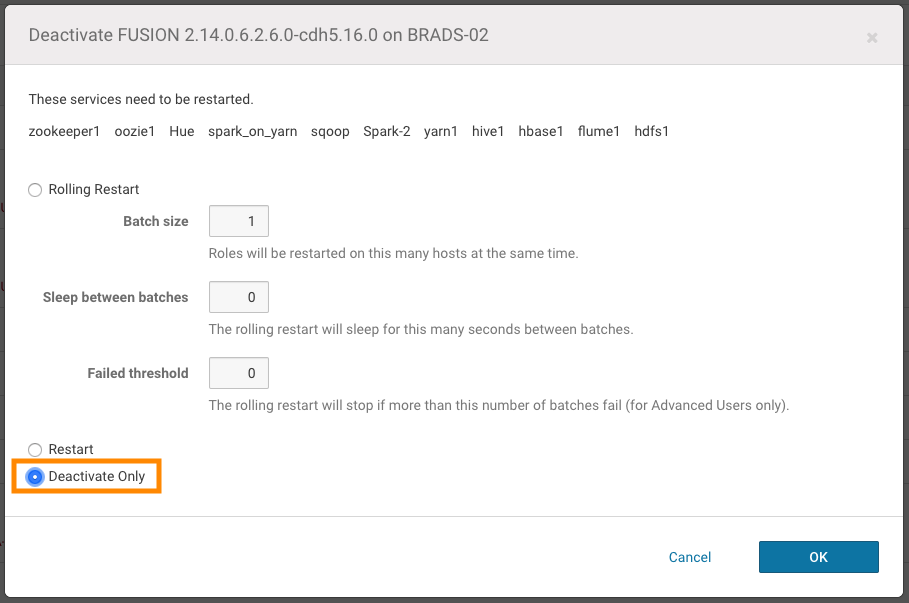

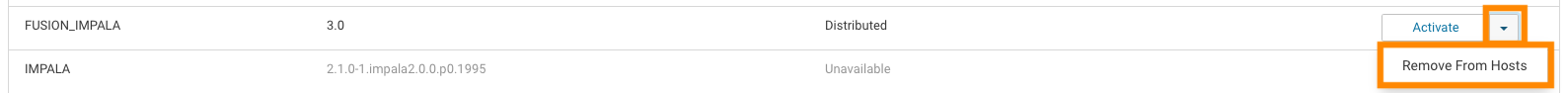

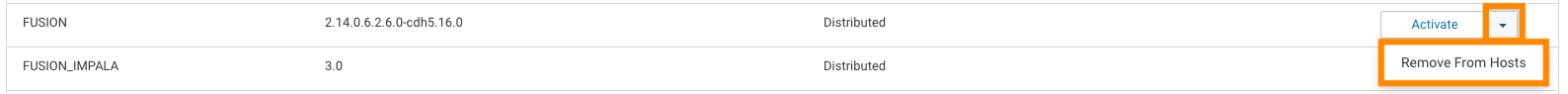

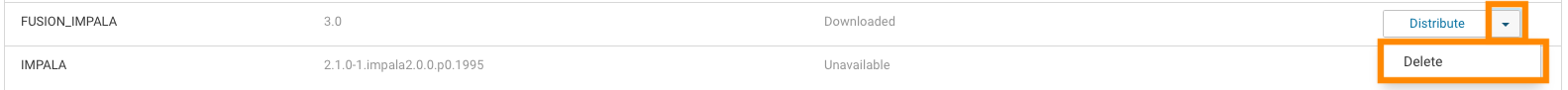

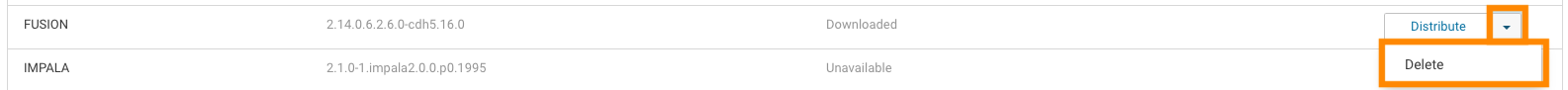

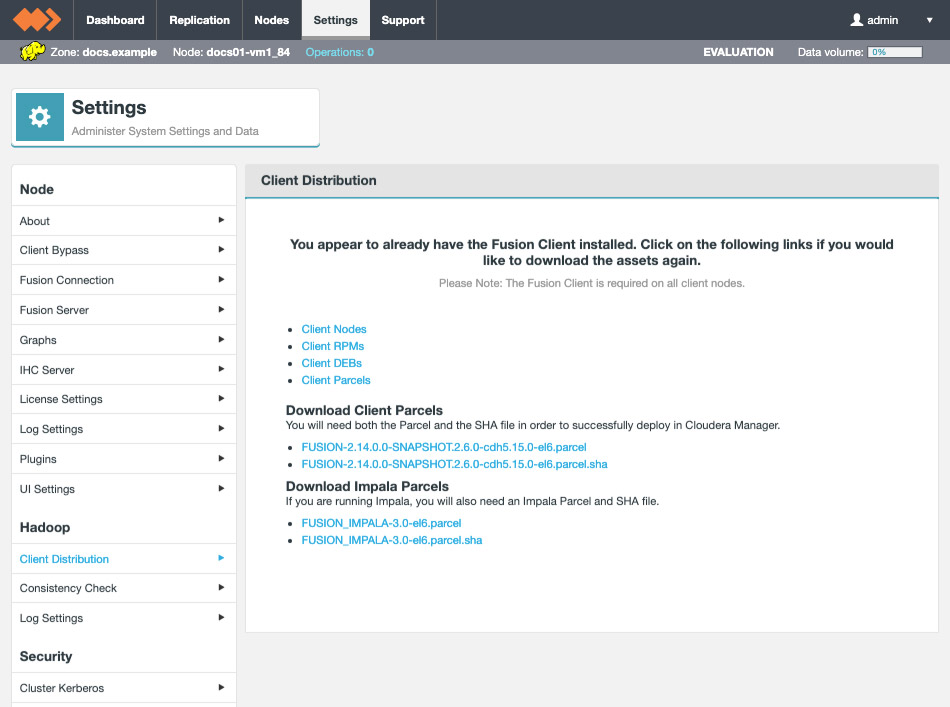

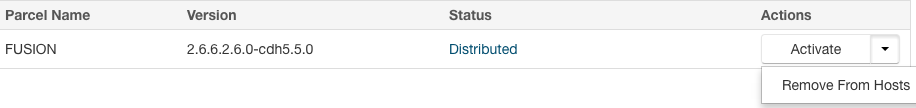

If you plan to use WANdisco Fusion’s own fusion:/// URI, you need to use the provided parcel (see the screenshot below for the link in the Client Download section of the Settings screen):

Follow the same steps described for installing the WANdisco Fusion client, downloading the parcel and SHA file, i.e.:

-

Have a cluster with CDH installed with parcels and Impala.

-

Copy the

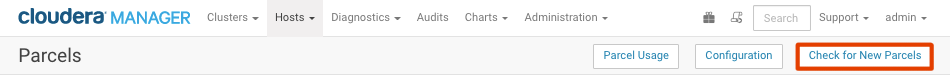

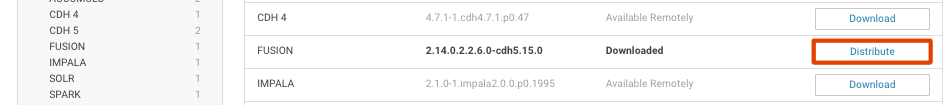

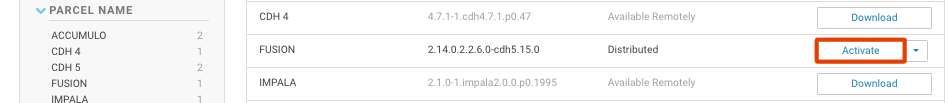

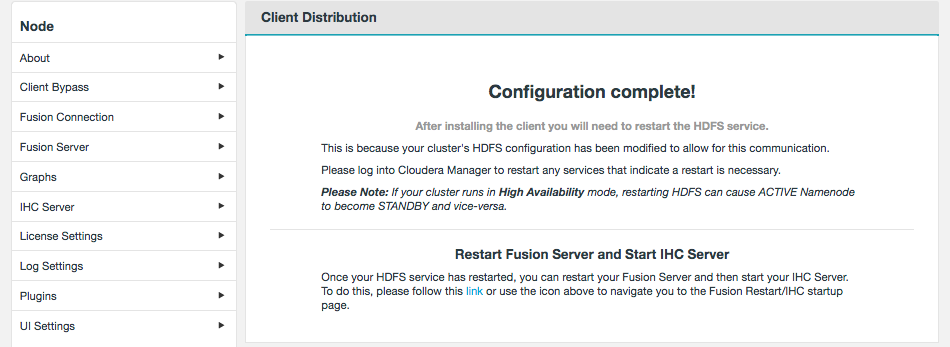

FUSION_IMPALAparcel and SHA into the local parcels repository, on the same node where Cloudera Manager Services is installed, this need not be the same location where the Cloudera Manager Server is installed. The default location is at: /opt/cloudera/parcel-repo, but is configurable. In Cloudera Manager, you can go to the Parcels Management Page → Edit Settings to find the Local Parcel Repository Path. See Parcel Locations.FUSION_IMPALA should be available to distribute and activate on the Parcels Management Page, remember to click Check for New Parcels button.

-

Once installed, restart the cluster.

-

Impala reads on Fusion files should now be available.

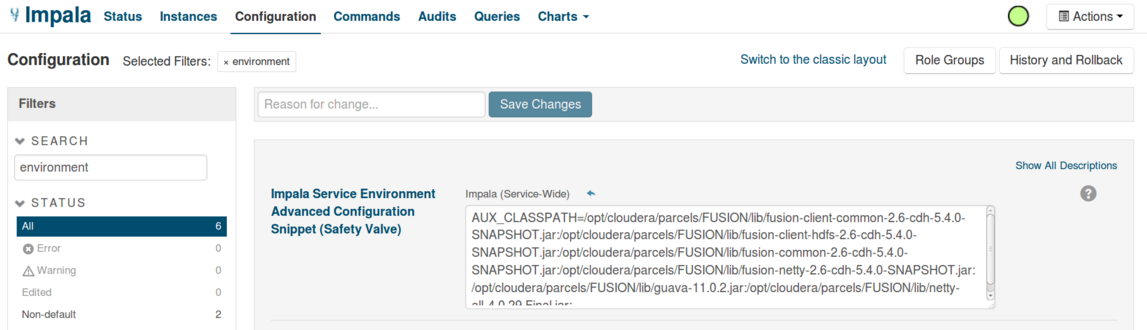

Setting the CLASSPATH

For Impala to load the Fusion client jars, you need to make a small configuration change to the Impala service in Cloudera Manager. Add the following environment variable in the section Impala Service Environment Advanced Configuration Snippet (Safety Valve).

AUX_CLASSPATH='colon-delimited list of all the Fusion client jars'

The following command shows one way to generate this value:

echo "AUX_CLASSPATH=$( (for i in /opt/cloudera/parcels/FUSION/lib/*.jar; do echo -n "${i}:"; done) | sed 's/:$//')"
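You can also produce the same value from Python, which avoids the shell quoting. The following is a minimal sketch that assumes the default parcel path shown above. format_aux_classpath and build_aux_classpath are hypothetical helper names. The jars are sorted so that the output is deterministic.

```python
import glob
import os

FUSION_PARCEL_LIB = "/opt/cloudera/parcels/FUSION/lib"


def format_aux_classpath(paths) -> str:
    """Join .jar paths with colons, like the shell loop and sed above."""
    jars = sorted(p for p in paths if p.endswith(".jar"))
    return "AUX_CLASSPATH=" + ":".join(jars)


def build_aux_classpath(lib_dir: str = FUSION_PARCEL_LIB) -> str:
    """List the jars in lib_dir and format them as an AUX_CLASSPATH entry."""
    return format_aux_classpath(glob.glob(os.path.join(lib_dir, "*.jar")))


if __name__ == "__main__":
    print(build_aux_classpath())
```

Paste the printed line into the Safety Valve field.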

3.4.4. Presto

Presto Interoperability

Presto is an open source distributed SQL query engine for running interactive analytic queries. It can query and interact with multiple data sources, and can be extended with plugins.

Presto requires the use of Java 8 and has internal dependencies on Java library versions that may conflict with those of the Hadoop distribution with which it communicates when using the “hive-hadoop2” plugin.

Presto and Fusion

WANdisco Fusion leverages a replacement client library when overriding the hdfs:// scheme for access to the cluster file system in order to coordinate that access among multiple clusters.

This replacement library is provided in a collection of jar files in the /opt/wandisco/fusion/client/lib directory for a standard installation.

These jar files need to be available to any process that accesses the file system using the com.wandisco.fs.client.FusionHdfs implementation of the Apache Hadoop FileSystem API.

Because Presto requires these classes to be available to the hive-hadoop2 plugin, they must reside in the plugin/hive-hadoop2 directory of the Presto installation.

Using the Fusion Client Library with Presto

-

Copy the JAR files into the plugin/hive-hadoop2 directory of each Presto server.

-

Restart the Presto coordinators.
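The following is a minimal Python sketch of the copy step, to be run on each Presto server. It assumes the standard client library location given above, with the Presto installation directory passed in as presto_home. Both copy_fusion_jars and its presto_home parameter are hypothetical, and this is not a WANdisco or Presto tool.

```python
import glob
import os
import shutil

FUSION_CLIENT_LIB = "/opt/wandisco/fusion/client/lib"


def copy_fusion_jars(presto_home: str, client_lib: str = FUSION_CLIENT_LIB) -> list:
    """Copy every Fusion client jar into <presto_home>/plugin/hive-hadoop2.

    Returns the names of the copied jars. Existing files with the same
    name are overwritten, as a plain cp would do.
    """
    plugin_dir = os.path.join(presto_home, "plugin", "hive-hadoop2")
    if not os.path.isdir(plugin_dir):
        raise FileNotFoundError(f"hive-hadoop2 plugin directory not found: {plugin_dir}")
    copied = []
    for jar in sorted(glob.glob(os.path.join(client_lib, "*.jar"))):
        shutil.copy2(jar, plugin_dir)  # copy2 also keeps the file timestamps
        copied.append(os.path.basename(jar))
    return copied
```

The function fails early if the plugin directory is missing, which usually means presto_home points at the wrong location. Restart the coordinators afterwards, as described above.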

It is also important to confirm that the Presto configuration includes the necessary properties to function correctly with the hive-hadoop2 plugin.

The specific values below will need to be adjusted for the actual environment, including references to the WANdisco replicated metastore, the HDP cluster configuration that includes Fusion configuration, and Kerberos-specific information to allow Presto to interoperate with a secured cluster.

connector.name=hive-hadoop2

hive.metastore.uri=thrift://presto02-vm1.test.server.com:9084

hive.config.resources=/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml

hive.metastore.authentication.type=KERBEROS

hive.metastore.service.principal=hive/presto02-vm1.test.server.com@WANDISCO.HADOOP

hive.metastore.client.principal=presto/presto02-vm0.test.server.com@WANDISCO.HADOOP

hive.metastore.client.keytab=/etc/security/keytabs/presto.keytab

hive.hdfs.authentication.type=KERBEROS

hive.hdfs.impersonation.enabled=true

hive.hdfs.presto.principal=hdfs-presto2@WANDISCO.HADOOP

hive.hdfs.presto.keytab=/etc/security/keytabs/hdfs.headless.keytab

Keytabs and principals will need to be configured correctly, and as the hive-hadoop2 Presto plugin uses YARN for operation, the /user/yarn directory must exist and be writable by the yarn user in all clusters in which Fusion operates.

Known Issue

Presto embeds Hadoop configuration defaults into the hive-hadoop2 plugin, including a core-default.xml file that specifies the following property entry:

<property>

<name>hadoop.security.authentication</name>

<value>simple</value>

<description>Possible values are simple (no authentication), and kerberos</description>

</property>

Presto allows the hive-hadoop2 plugin to use additional configuration properties. You add them with entries like the following in a .properties file in the etc/catalog directory:

hive.config.resources=/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml

This entry allows extra configuration properties to be loaded from a standard Hadoop configuration file, but those entries cannot override settings that are embedded in the core-default.xml that ships with the Presto hive-hadoop2 plugin.

In a kerberized implementation the Fusion client library relies on the ability to read the hadoop.security.authentication configuration property to determine if it should perform a secure handshake with the Fusion server. Without that property defined, the client and server will fail to perform their security handshake, and Presto queries will not succeed.

Workaround

The solution to this issue is to update the core-default.xml file contained in the hive-hadoop2 plugin:

$ mkdir ~/tmp

$ cd ~/tmp

$ jar -xvf <path to…>/presto-server-0.164/plugin/hive-hadoop2/hadoop-apache2-0.10.jar

Edit the core-default.xml file to set the value of the hadoop.security.authentication property to “kerberos”, then update the jar:

$ jar -uf <path to...>/presto-server-0.164/plugin/hive-hadoop2/hadoop-apache2-0.10.jar core-default.xml

Distribute the hadoop-apache2-0.10.jar to all Presto nodes, and restart the Presto coordinator.
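You can also do the extract, edit and update steps in one pass with Python's zipfile module. This sketch assumes, as the jar commands above do, that core-default.xml sits at the root of the jar. patch_jar and set_auth_to_kerberos are hypothetical names. Instead of updating the jar in place, the sketch writes a new file, so you can keep the original as a backup. The regular expression is a deliberately narrow text substitution rather than a full XML rewrite, which preserves the comments and formatting in core-default.xml.

```python
import re
import zipfile

ENTRY = "core-default.xml"

# Matches the <value> that follows <name>hadoop.security.authentication</name>.
_AUTH_VALUE = re.compile(
    r"(<name>\s*hadoop\.security\.authentication\s*</name>\s*<value>)[^<]*(</value>)"
)


def set_auth_to_kerberos(xml_text: str) -> str:
    """Set hadoop.security.authentication to kerberos in core-default.xml text."""
    new_text, count = _AUTH_VALUE.subn(r"\1kerberos\2", xml_text)
    if count != 1:
        raise ValueError(f"expected one hadoop.security.authentication entry, found {count}")
    return new_text


def patch_jar(src_jar: str, dst_jar: str) -> None:
    """Copy src_jar to dst_jar, rewriting core-default.xml on the way."""
    with zipfile.ZipFile(src_jar) as src, zipfile.ZipFile(dst_jar, "w") as dst:
        for info in src.infolist():
            data = src.read(info)
            if info.filename == ENTRY:
                data = set_auth_to_kerberos(data.decode("utf-8")).encode("utf-8")
            # Reusing the original ZipInfo keeps entry order, timestamps and compression.
            dst.writestr(info, data)
```

For example, write the patched copy next to hadoop-apache2-0.10.jar. Then move it into place on each Presto node before you restart the coordinator.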

3.4.5. Oozie

The Oozie service works with Fusion without further changes on Cloudera CDH. On Hortonworks HDP, apply the following procedure after completing the WANdisco Fusion installation:

-

Open a terminal to the node with root privileges.

-

If Fusion was previously installed and has now been removed, check that any dead symlinks have been removed.

cd /usr/hdp/current/oozie-server/libext

ls -l

rm [broken symlinks]

-

Create the symlinks for fusion client jars.

ln -s /opt/wandisco/fusion/client/lib/* /usr/hdp/current/oozie-server/libext

-

In Ambari, stop the Oozie Server service.

-

Open a terminal session as user oozie and run:

/usr/hdp/current/oozie-server/bin/oozie-setup.sh prepare-war

-

In Ambari, start the Oozie Server service.

Note that the new symlinks are created even if old ones are still present. However, if the previous symlinks have not been removed manually first, the WAR packaging that happens when the Oozie server starts will fail, and the Oozie server will not start.

Make sure that old symlinks in /usr/hdp/current/oozie-server/libext are removed before you install the new client stack.
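The cleanup and linking steps can be sketched in Python as follows. This is a minimal sketch that assumes the default paths used above. remove_broken_symlinks and link_client_jars are hypothetical helpers that replace only the ls/rm and ln -s steps. You still need to stop the Oozie Server, run oozie-setup.sh prepare-war as the oozie user, and start the server again.

```python
import glob
import os

LIBEXT = "/usr/hdp/current/oozie-server/libext"
CLIENT_LIB = "/opt/wandisco/fusion/client/lib"


def remove_broken_symlinks(directory: str = LIBEXT) -> list:
    """Delete dangling symlinks in directory and return their names."""
    removed = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        # islink() looks at the link itself; exists() follows it and returns
        # False when the target has gone, which is what "broken" means here.
        if os.path.islink(path) and not os.path.exists(path):
            os.remove(path)
            removed.append(name)
    return removed


def link_client_jars(client_lib: str = CLIENT_LIB, libext: str = LIBEXT) -> list:
    """Symlink every file in client_lib into libext and return the new link names."""
    linked = []
    for target in sorted(glob.glob(os.path.join(client_lib, "*"))):
        link = os.path.join(libext, os.path.basename(target))
        if os.path.lexists(link):
            continue  # leave existing files and links untouched
        os.symlink(target, link)
        linked.append(os.path.basename(target))
    return linked
```

Unlike ln -s with a wildcard, link_client_jars skips names that already exist in libext instead of reporting an error. This makes the sketch safe to run more than once.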

Oozie installation changes

In Hyper Scale-Out Platform (HSP) installations, be aware that when you install the client stack, the fusion-client RPM creates symlinks in /usr/hdp/current/oozie-server/libext for the client jars. However, these symlinks are left behind if the client stack/RPM is removed.

If a new version of fusion-client is installed, Oozie server will refuse to start because of the broken symlinks.

A change in behavior

Installing clients via RPM/Deb packages no longer automatically stops and repackages Oozie. If Oozie was running before the client installation, you need to stop Oozie manually, then run the Oozie setup command:

oozie-setup.sh prepare-war

If possible, complete these actions through Ambari.

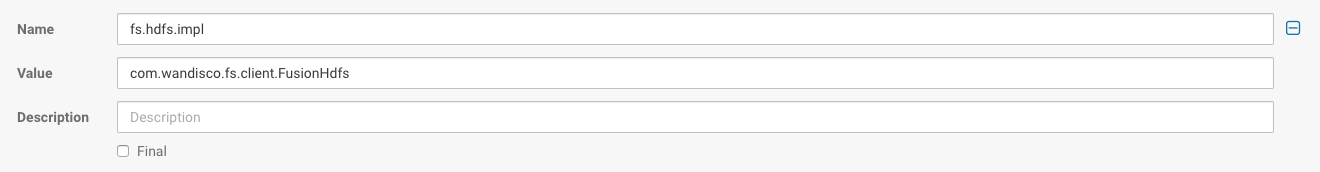

If Oozie is installed after WANdisco Fusion

In this case, the symlinks necessary for the jar archive files will not have been created. Under Ambari, using the "Refresh configs" service action on the WANdisco Fusion service should trigger re-linking and the prepare-war process.

If not installed directly via RPM/Deb packages, you should use the manual process for reinstalling the package, followed by the same steps noted above to stop and restart Oozie, using the setup script.

3.4.6. Oracle: Big Data Appliance

Each node in an Oracle:BDA deployment has multiple network interfaces, with at least one used for intra-rack communications and one used for external communications. WANdisco Fusion requires external communications, so you must configure it with the public IP addresses instead of host names.

Prerequisites

-

Knowledge of Oracle:BDA architecture and configuration.

-

Ability to modify Hadoop site configuration.

Required steps

-

Configure WANdisco Fusion to support Kerberos. See Setting up Kerberos

-

Configure WANdisco Fusion to work with NameNode High Availability, as described in Oracle’s documentation.

-

Restart the cluster, WANdisco Fusion and IHC processes. See init.d management script

-

Test that replication between zones is working.

3.4.7. Apache Livy

Apache Livy may fail to start with a FusionHdfs class not found error. As a Spark1 application, it uses neither the standard Hadoop classpath nor the Spark assembly.

Based on the current active version of HDP/Livy, you can resolve this with the following symlink.

ln -s /opt/wandisco/fusion/client/lib/* /usr/hdp/current/livy-server/jars/

3.4.8. Apache Tez

Apache Tez is a YARN application framework that supports high performance data processing through DAGs. When set up, Tez uses its own tez.tar.gz containing the dependencies and libraries that it needs to run DAGs.

Tez with Hive

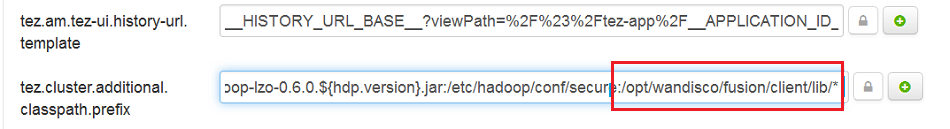

To make Hive with Tez work, append the Fusion jar files to tez.cluster.additional.classpath.prefix under the Advanced tez-site section:

tez.cluster.additional.classpath.prefix = /opt/wandisco/fusion/client/lib/*

e.g. WANdisco Fusion tree

On Hortonworks Data Platform, the tez.lib.uris parameter defaults to /hdp/apps/${hdp.version}/tez/tez.tar.gz. There are three options for adding the Fusion libs.

| The Fusion installer uses Option 1. |

Option 1: Delete the tez.lib.uris path, e.g. "/hdp/apps/${hdp.version}/tez/tez.tar.gz". Instead, use a list including the path where the tez.tar.gz file will unpack, and the path where Fusion libs are located.

Option 2: Unpack tez.tar.gz, repack it with the WANdisco Fusion libs, and re-upload it to HDFS.

Option 3:

Alternatively, you may set the tez.lib.uris property with the path to the WANdisco Fusion client jar files, e.g.

<property>

<name>tez.lib.uris</name>

<!-- Location of the Tez jars and their dependencies. -->

<!-- Tez applications download required jar files from this location, so it should be publicly accessible. -->

<value>${fs.default.name}/apps/tez/,${fs.default.name}/apps/tez/lib/</value>

</property>

| All these methods are vulnerable to a platform (HDP) upgrade. |

3.4.9. Tez / Hive2 with LLAP

The following configuration changes are needed when running Tez with Low Latency Analytical Processing functionality.

Tez Overview

Testing Hive2 with LLAP (Low Latency Analytical Processing), using Apache Slider to run Tez Application Masters on YARN, shows that running a Tez query through this interface results in a FusionHdfs class not found error.

The following steps show an example remedy, through the bundling of the client jars into the tez.lib.uris tar.gz.

| Verified on HDP 2.6.2: the following example was tested on HDP 2.6.2. The procedure may differ on other platforms. |

-

First, extract the existing Tez library to a local directory.

# mkdir /tmp/tezdir

# cd /tmp/tezdir

# cp /usr/hdp/2.6*/tez_hive2/lib/tez.tar.gz .

# tar xvzf tez.tar.gz

-

Add the Fusion client jars to the same extracted location.

# cp /opt/wandisco/fusion/client/lib/* .

-

Re-package the Tez library including the Fusion jars.

# tar cvzf tez.tar.gz *

-

Upload the enlarged Tez library to HDFS, taking a backup of the original first. The -f option lets -put overwrite the existing tez.tar.gz.

# hdfs dfs -cp /hdp/apps/<your-hdp-version>/tez_hive2/tez.tar.gz /user/<username>/tez.tar.gz.pre-WANdisco

# hdfs dfs -put -f tez.tar.gz /hdp/apps/<your-hdp-version>/tez_hive2/

Note: The <your-hdp-version> component of the path name needs to match the point release of HDP you are using. This should be in the form 2.major.minor.release-build id, e.g. /hdp/apps/2.6.3.0-235/tez_hive2.

-

Restart the LLAP service through Ambari.
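You can also do the extract, copy and re-package steps without unpacking to disk, using Python's tarfile module. The following is a minimal sketch. It assumes, as the cp command above does, that the Fusion jars belong at the top level of the archive. repack_tez_tarball is a hypothetical name. If a jar's name already exists in the archive, the jar replaces the original entry, which matches how cp overwrites files in the extracted directory. The result goes to a new file, which you then upload to HDFS as shown above.

```python
import os
import tarfile


def repack_tez_tarball(original: str, extra_jars: list, output: str) -> list:
    """Write output: the members of original plus extra_jars at top level.

    Returns the sorted names of the jars that were added.
    """
    jar_names = {os.path.basename(path) for path in extra_jars}
    with tarfile.open(original, "r:gz") as src, tarfile.open(output, "w:gz") as dst:
        for member in src.getmembers():
            if os.path.normpath(member.name) in jar_names:
                continue  # a Fusion file of the same name replaces it, like cp would
            # Only regular files carry data; directories and links are copied as headers.
            fileobj = src.extractfile(member) if member.isfile() else None
            dst.addfile(member, fileobj)
        for path in sorted(extra_jars):
            dst.add(path, arcname=os.path.basename(path))
    return sorted(jar_names)
```

For example, pass the files listed in /opt/wandisco/fusion/client/lib as extra_jars. Then upload the new archive under the name tez.tar.gz.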

3.4.10. Apache Ranger

Apache Ranger is another centralized security console for Hadoop clusters, a preferred solution for Hortonworks HDP (whereas Cloudera prefers Apache Sentry). While Apache Sentry stores its policy file in HDFS, Ranger uses its own local MySQL database, which introduces concerns over non-replicated security policies.

Ranger applies its policies through Java plugins in the ecosystem components, such as the NameNode and HiveServer. In testing, the WANdisco Fusion client has not experienced any problems communicating with Apache Ranger-enabled platforms (Ranger+HDFS).

3.4.11. Apache Kafka

Apache Kafka is a distributed publish-subscribe messaging system. Now part of the Apache project, Kafka is fast, scalable and by its nature, distributed, either across multiple servers, clusters or even data centers. See Apache Kafka.

| Known problem: when Ranger auditing is enabled for Kafka, the audit logging data spools on local disk because the write to HDFS fails. The failure is caused by a "no class found" issue with the Fusion client. If you added the Fusion client jars location to the CLASSPATH, a typical error message is: |

java.lang.ClassCastException: com.wandisco.fs.client.FusionHdfs cannot be cast to org.apache.hadoop.fs.FileSystem

Workaround

To override the fs.hdfs.impl setting in core-site.xml, add a custom property in Custom ranger-kafka-audit under the Kafka configuration in Ambari.

-

Ambari → Kafka → Configs

-

Expand Custom ranger-kafka-audit

-

Add the following property:

xasecure.audit.destination.hdfs.config.fs.hdfs.impl=org.apache.hadoop.hdfs.DistributedFileSystem

-

Save the changes.

3.4.12. Solr

Apache Solr is a scalable search engine that can be used with HDFS.

In this section we cover what you need to do for Solr to work with a WANdisco Fusion deployment.

Note: Solr only comes with CDH and IOP 4.2 and greater.

-

WANdisco Fusion is unable to support Solr on CDH 6. Read Known Issue: WD-FUS-6404 for more information.

-

For information on how to use Solr with HDP, read the Knowledge base article Solr support for HDP distributions.

Minimal deployment using the default hdfs:// URI

Getting set up with the default URI is simple. Solr just needs to be able to find the Fusion client jar files that contain the FusionHdfs class.

-

Copy the Fusion/Netty jars into the classpath. Follow these steps on all deployed Solr servers. For CDH 5.4 with parcels, use these commands:

cp /opt/cloudera/parcels/FUSION/lib/fusion* /opt/cloudera/parcels/CDH/lib/solr/webapps/solr/WEB-INF/lib

cp /opt/cloudera/parcels/FUSION/lib/netty-all-*.Final.jar /opt/cloudera/parcels/CDH/lib/solr/webapps/solr/WEB-INF/lib

cp /opt/cloudera/parcels/FUSION/lib/wd-guava-15.0.jar /opt/cloudera/parcels/CDH/lib/solr/webapps/solr/WEB-INF/lib

cp /opt/cloudera/parcels/FUSION/lib/bcprov-jdk15on-1.54.jar /opt/cloudera/parcels/CDH/lib/solr/webapps/solr/WEB-INF/lib

-

Restart all Solr Servers.

-

Solr is now successfully configured to work with WANdisco Fusion.

Minimal deployment using the WANdisco "fusion://" URI

This is a minimal working solution with Solr on top of fusion.

Requirements

Solr will use a shared replicated directory.

-

Symlink the WANdisco Fusion jars into Solr webapp.

cd /opt/cloudera/parcels/CDH/lib/solr/webapps/solr/WEB-INF/lib

ln -s /opt/cloudera/parcels/FUSION/lib/fusion* .

ln -s /opt/cloudera/parcels/FUSION/lib/netty-all-4* .

ln -s /opt/cloudera/parcels/FUSION/lib/bcprov-jdk15on-*.jar .

-

Restart Solr.

-

Create instance configuration.

$ solrctl instancedir --generate conf1

-

Edit conf1/conf/solrconfig.xml and replace solr.hdfs.home in the directoryFactory definition with the actual fusion:/// URI, such as fusion:///repl1/solr.

-

Create the solr directory and set its ownership to solr:solr.

$ sudo -u hdfs hdfs dfs -mkdir fusion:///repl1/solr

$ sudo -u hdfs hdfs dfs -chown solr:solr fusion:///repl1/solr

-

Upload the configuration to ZooKeeper.

$ solrctl instancedir --create conf1 conf1

-

Create a collection on the first cluster.

$ solrctl collection --create col1 -c conf1 -s 3
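You can script the solrconfig.xml edit. Run it before you upload the configuration to ZooKeeper. This minimal Python sketch assumes that the generated configuration contains a single <str name="solr.hdfs.home"> element inside the directoryFactory definition. set_solr_hdfs_home and update_solrconfig are hypothetical helpers. The sketch uses a narrow text substitution, so the rest of the file, including comments, is left untouched.

```python
import re

# The <str name="solr.hdfs.home"> element inside <directoryFactory>.
_HDFS_HOME = re.compile(r'(<str\s+name="solr\.hdfs\.home"\s*>)[^<]*(</str>)')


def set_solr_hdfs_home(xml_text: str, uri: str) -> str:
    """Return solrconfig.xml text with solr.hdfs.home set to a fusion:/// URI."""
    if not uri.startswith("fusion:///"):
        raise ValueError("use a fusion:/// URI without a namespace, e.g. fusion:///repl1/solr")
    # A function replacement avoids backslash handling in the URI text.
    new_text, count = _HDFS_HOME.subn(lambda m: m.group(1) + uri + m.group(2), xml_text)
    if count != 1:
        raise ValueError(f"expected one solr.hdfs.home element, found {count}")
    return new_text


def update_solrconfig(path: str, uri: str) -> None:
    """Rewrite the file at path (e.g. conf1/conf/solrconfig.xml) in place."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_solr_hdfs_home(text, uri))
```

For example, update_solrconfig("conf1/conf/solrconfig.xml", "fusion:///repl1/solr") makes the same change as the manual edit described above.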

| Tip: for Cloudera, set fs.hdfs.impl.disable.cache = true for Solr servers only. Don’t set this option cluster-wide, because that will stall the WANdisco Fusion server with an unbounded number of client connections. |

3.4.13. Flume

This set of instructions sets up Flume to ingest data via the fusion:/// URI.

Edit the configuration and set "agent.sources.flumeSource.command" to the path of the source data.

Set “agent.sinks.flumeHDFS.hdfs.path” to the replicated directory of one of the DCs. Make sure it begins with fusion:/// to push the files to Fusion and not hdfs.
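For reference, a complete flume.conf built around these two properties might look like the output of the following Python sketch. Only agent.sources.flumeSource.command and agent.sinks.flumeHDFS.hdfs.path come from this guide. The exec source, the memory channel and its name memoryChannel, and the example tail -F command are assumptions chosen for illustration. flume_conf is a hypothetical helper, and its default agent name matches the Agent Name value used when setting up Flume through Cloudera Manager.

```python
def flume_conf(source_command: str, sink_path: str, agent: str = "agent") -> str:
    """Return flume.conf text for one exec source, one memory channel, one HDFS sink."""
    if not sink_path.startswith("fusion:///"):
        raise ValueError("hdfs.path must begin with fusion:/// so files go through Fusion")
    lines = [
        f"{agent}.sources = flumeSource",
        f"{agent}.channels = memoryChannel",  # channel name is an assumption
        f"{agent}.sinks = flumeHDFS",
        # Exec source: runs source_command and ingests each line it prints.
        f"{agent}.sources.flumeSource.type = exec",
        f"{agent}.sources.flumeSource.command = {source_command}",
        f"{agent}.sources.flumeSource.channels = memoryChannel",
        f"{agent}.channels.memoryChannel.type = memory",
        # HDFS sink writing to the replicated directory through the fusion:/// URI.
        f"{agent}.sinks.flumeHDFS.type = hdfs",
        f"{agent}.sinks.flumeHDFS.hdfs.path = {sink_path}",
        f"{agent}.sinks.flumeHDFS.channel = memoryChannel",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(flume_conf("tail -F /var/log/app.log", "fusion:///repl1/flume_out"))
```

The check on sink_path enforces the fusion:/// rule above. The generated text is what you would paste into the Configuration File field.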

Prerequisites

-

Create a user in both clusters:

useradd -G hadoop <username>

-

Create a user directory in the Hadoop file system:

hadoop fs -mkdir /user/<username>

-

Create a replication directory in both DCs:

hadoop fs -mkdir /fus-repl

-

Set the ownership of the replication directory:

hadoop fs -chown <username>:hadoop /fus-repl

-

Install and configure WANdisco Fusion.

Setting up Flume through Cloudera Manager

If you want to set up Flume through Cloudera Manager follow these steps:

-

Download the client in the form of a parcel and the parcel.sha through the UI.

-

Put the parcel and .sha file into /opt/cloudera/parcel-repo on the Cloudera Manager node.

-

Go to the UI on the Cloudera Manager node. On the main page, click the small button that looks like a gift-wrapped box. The FUSION parcel should appear (if it doesn’t, try clicking Check for New Parcels and wait a moment).

-

Install, distribute, and activate the parcel.

-

Repeat steps 1-4 for the second zone.

-

Make sure replication rules are created for sharing between zones.

-

Go onto Cloudera Manager’s UI on one of the zones and click Add Service.

-

Select the Flume Service. Install the service on any of the nodes.

-

Once installed, go to Flume→Configurations.

-

Set 'System User' to 'hdfs'

-

Set 'Agent Name' to 'agent'

-

Set 'Configuration File' to the contents of the flume.conf configuration.

-

Restart Flume Service.

-

Selected data should now be in Zone1 and replicated in Zone2

-

To check that the data was replicated, open a terminal on one of the DCs, become the hdfs user (e.g. su hdfs), and run hadoop fs -ls /repl1/flume_out.

-

On both Zones, there should be the same FlumeData file with a long number. This file will contain the contents of the source(s) you chose in your configuration file.

3.4.14. Spark1

It’s possible to deploy WANdisco Fusion with Apache’s high-speed data processing engine. Note that prior to version 2.9.1 you needed to manually add the SPARK_CLASSPATH.

Spark with CDH

There is a known issue where Spark does not pick up hive-site.xml, so the Hadoop configuration is not localised when you submit a job in yarn-cluster mode (fixed in Spark 1.4).

You need to manually add it in by either:

-

Copy /etc/hive/conf/hive-site.xml into /etc/spark/conf.

or

-

Do one of the following, depending on which deployment mode you are running in:

- Client

-

set HADOOP_CONF_DIR to /etc/hive/conf/ (or the directory where hive-site.xml is located).

- Cluster

-

add --files=/etc/hive/conf/hive-site.xml (or the path for hive-site.xml) to the spark-submit script.

-

Deploy configs and restart services.

| Using the FusionUri: the fusion:/// URI has a known issue where it reports a "Wrong fs" error. For now, Spark is only verified with FusionHdfs through the hdfs:/// URI. |

Fusion Spark Interoperability

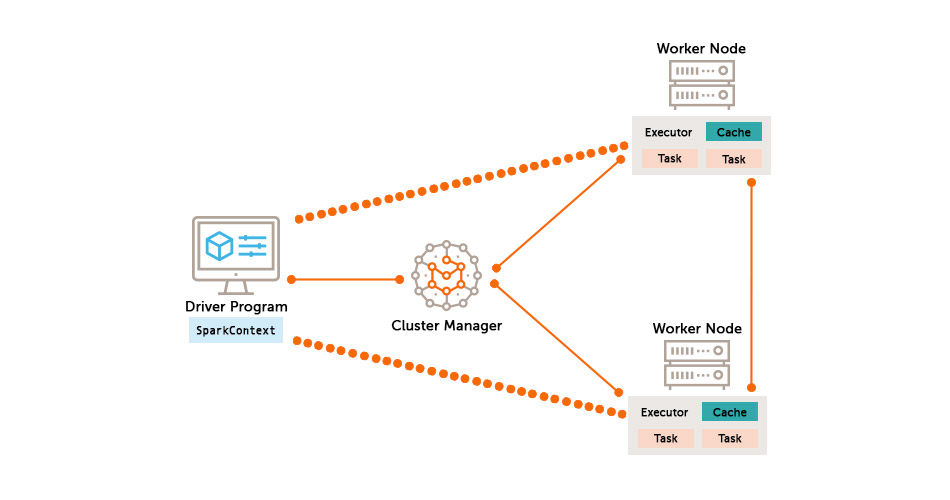

Spark applications are run on a cluster as independent sets of processes, coordinated by the SparkContext object in the driver program. To run on a cluster, the SparkContext can connect to several types of cluster managers (either Spark’s own standalone cluster manager, Mesos or YARN), which allocate resources across applications. Once connected, Spark acquires executors on nodes in the cluster, which are processes that run computations and store data for your application. Next, it sends your application code (defined by JAR or Python files passed to SparkContext) to the executors. Finally, SparkContext sends tasks to the executors to run.

Spark and Fusion

WANdisco Fusion uses a replacement client library when overriding the hdfs:// scheme for access to the cluster file system in order to coordinate that access among multiple clusters. This replacement library is provided in a collection of jar files in the /opt/wandisco/fusion/client/lib directory for a standard installation. These jar files need to be available to any process that accesses the file system using the com.wandisco.fs.client.FusionHdfs implementation of the Apache Hadoop File System API.

Because Spark does not provide a configurable mechanism for making the Fusion classes available to the Spark history server, the Spark Executor or Spark Driver programs, WANdisco Fusion client library classes need to be made available in the existing Spark assembly jar that holds the classes used by these Spark components. This requires updating that assembly jar to incorporate the Fusion client library classes.

Updating the Spark Assembly JAR

This is one of a number of methods that may be employed to provide Fusion-Spark integration. We hope to cover some alternate methods at a later date.

Hortonworks HDP

-

First, make a backup of the original Spark assembly jar:

$ cp /usr/hdp/<version>/spark/lib/spark-assembly-<version>-hadoop<version>.jar /usr/hdp/<version>/spark/lib/spark-assembly-<version>-hadoop<version>.jar.original

Then follow this process to update the Spark assembly jar.

$ mkdir /tmp/spark_assembly

$ cd /tmp/spark_assembly

$ jar -xf /opt/wandisco/fusion/client/lib/bcprov-jdk15on.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-adk-common.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-adk-netty.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-client-common.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-common.jar

$ jar -xf /opt/wandisco/fusion/client/lib/wd-guava.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-adk-client.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-adk-hadoop.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-adk-security.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-client-hdfs.jar

$ jar -xf /opt/wandisco/fusion/client/lib/fusion-messaging-core.jar

$ jar -xf /opt/wandisco/fusion/client/lib/wd-netty-all.jar

$ jar -uf /usr/hdp/<version>/spark/lib/spark-assembly-<version>-hadoop<version>.jar com/** org/** META-INF/**

-

You now have both the original Spark assembly jar (with the extension “.original”) and a version with the Fusion client libraries available in it. The updated version needs to be made available on each node in the cluster in the /usr/hdp/<version>/spark/lib directory.

-

If you need to revert to the original Spark assembly jar, simply copy it back in place on each node in the cluster.

Cloudera CDH

The procedure for Cloudera CDH is much the same as the one for HDP, provided above. Note the path differences:

-

First, make a backup of the original Spark assembly jar:

$ cp /opt/cloudera/parcels/CDH-<version>.cdh<version>/jars/spark-assembly-<version>-cdh<version>-hadoop<version>-cdh<version>.jar /opt/cloudera/parcels/CDH-<version>.cdh<version>/jars/spark-assembly-<version>-cdh<version>-hadoop<version>-cdh<version>.jar.original

Then follow this process to update the Spark assembly jar.

$ mkdir /tmp/spark_assembly

$ cd /tmp/spark_assembly

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/bcprov-jdk15on-1.54.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-adk-common.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-adk-netty.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-client-common.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-common.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/wd-guava.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-adk-client.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-adk-hadoop.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-adk-security.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-client-hdfs.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/fusion-messaging-core.jar

$ jar -xf /opt/cloudera/parcels/FUSION-<fusion-version>-cdh<version>/lib/wd-netty-all.jar

$ jar -uf /opt/cloudera/parcels/CDH-<version>.cdh<version>/jars/spark-assembly-<version>-cdh<version>-hadoop<version>-cdh<version>.jar com/** org/** META-INF/**

-

You now have both the original Spark assembly jar (with the extension “.original”) and a version with the Fusion client libraries available in it. The updated version needs to be made available on each node in the cluster in the /opt/cloudera/parcels/CDH-<version>.cdh<version>/jars/ directory (for example, /opt/cloudera/parcels/CDH-5.9.1-1.cdh5.9.1.p0.4/jars/).

-

If you need to revert to the original Spark assembly jar, simply copy it back in place on each node in the cluster.
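The jar -xf / jar -uf sequence can be reproduced in Python with the zipfile module, which makes the merge rules explicit. The following is a minimal sketch, and merge_into_assembly is a hypothetical name. Like the commands above, it does three things:

- It only takes entries under com/, org/ and META-INF/ from the client jars.
- It lets a later client jar win when two jars contain the same entry, as repeated jar -xf into one directory does.
- It lets client entries replace assembly entries of the same name, as jar -uf does.

The sketch writes a new jar instead of updating the assembly in place, but keeping the .original backup is still recommended.

```python
import zipfile

# The com/** org/** META-INF/** arguments passed to jar -uf above.
CLIENT_PREFIXES = ("com/", "org/", "META-INF/")


def merge_into_assembly(assembly: str, client_jars: list, output: str) -> int:
    """Write output: assembly plus client-jar entries. Returns the number of client entries."""
    client_entries = {}
    for jar in client_jars:
        with zipfile.ZipFile(jar) as zf:
            for info in zf.infolist():
                # Directory entries are skipped; only file entries are merged.
                if info.is_dir() or not info.filename.startswith(CLIENT_PREFIXES):
                    continue
                # Later jars overwrite earlier ones, like repeated jar -xf into one directory.
                client_entries[info.filename] = zf.read(info)

    with zipfile.ZipFile(assembly) as src:
        with zipfile.ZipFile(output, "w", zipfile.ZIP_DEFLATED) as dst:
            for info in src.infolist():
                if info.filename in client_entries:
                    continue  # replaced by the client copy, as jar -uf would do
                dst.writestr(info, src.read(info))
            for name in sorted(client_entries):
                dst.writestr(name, client_entries[name])
    return len(client_entries)
```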

Spark Assembly Upgrade

The following example covers how you may upgrade the Spark Assembly as part of a Fusion upgrade. This example uses CDH 5.11, although it can be applied generically:

# Create staging path for client and spark assembly

mkdir -p /tmp/spark_assembly/assembly

# Copy existing Spark assembly to work on

cp /opt/cloudera/parcels/CDH/jars/spark-assembly-*.jar /tmp/spark_assembly/assembly/

# Collect file list for purging, sanitise the list as follows

# * List jar files. Do not list symlinks

# * Exclude directory entries which end with a '/'

# * Sort the list

# * Ensure output is unique

# * Store in file

find /opt/cloudera/parcels/FUSION/lib -name '*.jar' -type f -exec jar tf {} \; | grep -Ev '/$' | sort | uniq > /tmp/spark_assembly/old_client_classes.txt

# Purge assembly copy

xargs zip -d /tmp/spark_assembly/assembly/spark-assembly-*.jar < /tmp/spark_assembly/old_client_classes.txt

The resulting spark-assembly is now purged and requires one of two actions:

-

If WANdisco Fusion is being removed, distribute the new assembly to all hosts.

-

If Fusion is being upgraded, retain this jar for the moment and use it within the assembly packaging process for the new client.
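You can also sketch the purge pipeline above in Python. purge_client_classes is a hypothetical name. It drops every non-directory entry name found in the given client jars from a copy of the assembly, mirroring the find / jar tf / zip -d commands. The find command uses -type f, so pass only the real jar files of the old client, not symlinks to them.

```python
import zipfile


def purge_client_classes(assembly: str, client_jars: list, output: str) -> int:
    """Write output: assembly minus every entry name found in client_jars.

    Returns the number of entries removed.
    """
    client_names = set()
    for jar in client_jars:
        with zipfile.ZipFile(jar) as zf:
            # Like grep -Ev '/$', ignore directory entries.
            client_names.update(n for n in zf.namelist() if not n.endswith("/"))

    removed = 0
    with zipfile.ZipFile(assembly) as src:
        with zipfile.ZipFile(output, "w", zipfile.ZIP_DEFLATED) as dst:
            for info in src.infolist():
                if info.filename in client_names:
                    removed += 1  # same effect as zip -d for this name
                    continue
                dst.writestr(info, src.read(info))
    return removed
```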

3.4.15. Spark 2

Spark 2 comes with significant performance improvements at the cost of incompatibility with Spark (1). The installation of Spark 2 is more straightforward, but there is one known issue: you need to restart the Spark 2 service during a silent installation. Without a restart, configuration changes will not be picked up.

|

Spark 2 on HDP 3.x

If you are using HDP 3.x, an additional step is required after deploying Fusion, before running any Spark jobs.

|

Manual symlink

If Spark 2 is installed after WANdisco Fusion, you will need to manually symlink the WANdisco Fusion client libraries.

For HDP, run the following three commands. Each one creates a symlink for every jar in the Fusion client library directory:

ln -s /opt/wandisco/fusion/client/lib/* /usr/hdp/current/spark2-client/jars
ln -s /opt/wandisco/fusion/client/lib/* /usr/hdp/current/spark2-historyserver/jars
ln -s /opt/wandisco/fusion/client/lib/* /usr/hdp/current/spark2-thriftserver/jars

On Cloudera-managed clusters, the symlinks are created automatically. However, if you are using unmanaged clusters, you will need to create the symlinks using the following command:

ln -s /opt/wandisco/fusion/client/lib/* /opt/cloudera/parcels/SPARK2/lib/spark2/jars/
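Each `ln -s .../lib/* <dir>` command fails with "File exists" if it is run a second time. If you script this step across many nodes, a minimal Python sketch such as the following can make it repeatable. `link_client_jars` is a hypothetical helper; it mirrors the shell glob by skipping hidden files, and it skips any name that already exists in the target directory rather than replacing it.

```python
import os
from pathlib import Path
from typing import List


def link_client_jars(client_lib: str, target_dir: str) -> List[str]:
    """Symlink every file in client_lib into target_dir, like
    `ln -s <client_lib>/* <target_dir>`, but skip names that already exist.
    Returns the names of the links that were created."""
    created = []
    target = Path(target_dir)
    for src in sorted(Path(client_lib).iterdir()):
        if src.name.startswith("."):
            continue  # the shell glob `*` does not match hidden files
        link = target / src.name
        # lexists() is also true for broken symlinks, so stale links are left alone.
        if os.path.lexists(link):
            continue
        link.symlink_to(src)
        created.append(src.name)
    return created
```

On HDP, for example, you might call it once for each of the three Spark 2 jar directories listed above, with `client_lib="/opt/wandisco/fusion/client/lib"`.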

3.4.16. HBase (Cold Back-up mode)

It’s possible to run HBase in a cold back-up mode across multiple data centers using WANdisco Fusion, so that if the active HBase node goes down, you can bring up the HBase cluster in another data center. However, there will be unavoidable and considerable inconsistency between the lost node and the awakened replica. The following procedure should make it possible to overcome corruption problems enough to start running HBase again. However, since the damage dealt to the underlying filesystem might be arbitrary, it’s impossible to account for all possible corruptions.

Requirements

For HBase to run with WANdisco Fusion, the following directories need to be created and permissioned, as shown below:

| platform | path | permission |
|---|---|---|
| CDH5.x | /user/hbase | hbase:hbase |
| HDP2.x | /hbase, /user/hbase | |

|

Known problem: permissions error blocks HBase repair.

Error example:

2016-09-22 17:14:43,617 WARN [main] util.HBaseFsck: Got AccessControlException when preCheckPermission
org.apache.hadoop.security.AccessControlException: Permission denied: action=WRITE path=hdfs://supp16-vm0.supp:8020/apps/hbase/data/.fusion user=hbase
    at org.apache.hadoop.hbase.util.FSUtils.checkAccess(FSUtils.java:1685)
    at org.apache.hadoop.hbase.util.HBaseFsck.preCheckPermission(HBaseFsck.java:1606)
    at org.apache.hadoop.hbase.util.HBaseFsck.exec(HBaseFsck.java:4223)
    at org.apache.hadoop.hbase.util.HBaseFsck$HBaseFsckTool.run(HBaseFsck.java:4063)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

To avoid this error, you can configure the root path for all .fusion directories associated with Deterministic State Machines (DSMs); see Customizable DSM token directories.

These settings can be made in the respective configurations to change the location of the .fusion directory. If you use them, the same configuration and path must be added to all Fusion servers in all zones. |

Procedure

The steps below provide a method of recovering using a cold back-up. Note that multiple HMaster/RegionServer restarts may be needed for certain steps: the hbck command generally requires the master to be running, and starting the master may first require fixing filesystem-level inconsistencies.

-

Delete all recovered.edits directory artifacts left by possible log splitting for each table/region. This might not be strictly necessary, but could reduce the number of errors observed during startup. For example:

hdfs dfs -rm -r /apps/hbase/data/data/default/TestTable/8fdee4924ac36e3f3fa430a68b403889/recovered.edits

-

Detect and clean up (quarantine) all corrupted HFiles in all tables, including the system tables hbase:meta and hbase:namespace. The sideline option makes hbck move corrupted HFiles to a special .corrupted directory, where admins can examine or clean them up:

hbase hbck -checkCorruptHFiles -sidelineCorruptHFiles

-

Attempt to rebuild corrupted table descriptors based on filesystem information:

hbase hbck -fixTableOrphans

-

General recovery step: try to fix assignments, possible region overlaps and region holes in HDFS, just in case:

hbase hbck -repair

-

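Clean up ZooKeeper (ZK). This is particularly necessary if hbase:meta or hbase:namespace were corrupted. Note that the exact name of the HBase znode is set by the cluster admin (the zookeeper.znode.parent property); /hbase-unsecure is used here as an example: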

hbase zkcli rmr /hbase-unsecure

-

Final step, to correct metadata-related errors:

hbase hbck -metaonly
hbase hbck -fixMeta
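If you expect to run this procedure more than once, keeping the commands in a script can help preserve their order. The following is a hedged Python sketch, not a WANdisco or HBase tool: `recovery_commands` and `run` are hypothetical names, the znode defaults to the `/hbase-unsecure` example used above, and the commands are only printed unless `dry_run=False`. It does not handle the HMaster/RegionServer restarts that the procedure may need between steps.

```python
import shlex
import subprocess
from typing import Iterable, List


def recovery_commands(recovered_edits_dirs: Iterable[str],
                      znode: str = "/hbase-unsecure") -> List[List[str]]:
    """Return the cold back-up recovery steps above, in order, as argv lists."""
    cmds = [["hdfs", "dfs", "-rm", "-r", d] for d in recovered_edits_dirs]
    cmds += [
        ["hbase", "hbck", "-checkCorruptHFiles", "-sidelineCorruptHFiles"],
        ["hbase", "hbck", "-fixTableOrphans"],
        ["hbase", "hbck", "-repair"],
        ["hbase", "zkcli", "rmr", znode],
        ["hbase", "hbck", "-metaonly"],
        ["hbase", "hbck", "-fixMeta"],
    ]
    return cmds


def run(cmds: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in cmds:
        print(("[dry-run] " if dry_run else "") + shlex.join(argv))
        if not dry_run:
            # check=True stops at the first failing step, so you can intervene
            # (for example, restart the HMaster) before continuing by hand.
            subprocess.run(argv, check=True)
```

Running it with the default `dry_run=True` first gives you the exact command list to review before anything touches the cluster.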

3.4.17. Apache Phoenix

The Phoenix Query Server provides an alternative means for interaction with Phoenix and HBase. When WANdisco Fusion is installed, the Phoenix query server may fail to start. The following workaround will get it running with Fusion.

-

Open phoenix_utils.py and comment out the following line:

#phoenix_class_path = os.getenv('PHOENIX_LIB_DIR','')

Then set WANdisco Fusion’s classpath instead, giving the client jar files as a colon-separated string. For example:

def setPath():
    PHOENIX_CLIENT_JAR_PATTERN = "phoenix-*-client.jar"
    PHOENIX_THIN_CLIENT_JAR_PATTERN = "phoenix-*-thin-client.jar"
    PHOENIX_QUERYSERVER_JAR_PATTERN = "phoenix-server-*-runnable.jar"
    PHOENIX_TESTS_JAR_PATTERN = "phoenix-core-*-tests*.jar"

    # Backward support old env variable PHOENIX_LIB_DIR replaced by PHOENIX_CLASS_PATH
    global phoenix_class_path
    #phoenix_class_path = os.getenv('PHOENIX_LIB_DIR','')
    phoenix_class_path = "/opt/wandisco/fusion/client/lib/fusion-client-hdfs-2.6.7-hdp-2.3.0.jar:/opt/wandisco/fusion/client/lib/fusion-client-common-2.6.7-hdp-2.3.0.jar:/opt/wandisco/fusion/client/lib/fusion-netty-2.6.7-hdp-2.3.0.jar:/opt/wandisco/fusion/client/lib/netty-all-4.0.23.Final.jar:/opt/wandisco/fusion/client/lib/guava-11.0.2.jar:/opt/wandisco/fusion/client/lib/fusion-common-2.6.7-hdp-2.3.0.jar"
    if phoenix_class_path == "":
        phoenix_class_path = os.getenv('PHOENIX_CLASS_PATH','')
    # ... remainder of setPath() unchanged

-

Edit queryserver.py and change the Java command construction so that it looks like the one below, appending phoenix_utils.phoenix_class_path to the -cp classpath after the java_home check:

if java_home:
    java = os.path.join(java_home, 'bin', 'java')
else:
    java = 'java'

#    " -Xdebug -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=n " + \
#    " -XX:+UnlockCommercialFeatures -XX:+FlightRecorder -XX:FlightRecorderOptions=defaultrecording=true,dumponexit=true" + \
java_cmd = '%(java)s -cp ' + hbase_config_path + os.pathsep + phoenix_utils.phoenix_queryserver_jar + os.pathsep + phoenix_utils.phoenix_class_path + \
    " -Dproc_phoenixserver" + \
    " -Dlog4j.configuration=file:" + os.path.join(phoenix_utils.current_dir, "log4j.properties") + \
    " -Dpsql.root.logger=%(root_logger)s" + \
    " -Dpsql.log.dir=%(log_dir)s" + \
    " -Dpsql.log.file=%(log_file)s" + \
    " " + opts + \

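Hard-coding versioned jar names, as in the phoenix_utils.py example, means the edit has to be redone after every Fusion upgrade. A minimal alternative sketch, assuming the client jars stay in /opt/wandisco/fusion/client/lib, builds the colon-separated string from whichever jars are present. `fusion_class_path` is a hypothetical helper, not part of Phoenix or Fusion.

```python
import glob
import os


def fusion_class_path(lib_dir: str = "/opt/wandisco/fusion/client/lib") -> str:
    """Join every jar in lib_dir into one classpath string, separated by
    os.pathsep (':' on Linux). Sorted so the result is stable between runs;
    returns an empty string if no jars are found."""
    jars = sorted(glob.glob(os.path.join(lib_dir, "*.jar")))
    return os.pathsep.join(jars)
```

If you defined this helper in phoenix_utils.py, setPath() could use `phoenix_class_path = fusion_class_path()` in place of the literal string, and the existing `if phoenix_class_path == "":` fallback would still apply when the directory is empty.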
3.4.18. Running with Apache HAWQ

For HAWQ to work with the WANdisco Fusion HDFS client libraries, the PXF classpath needs to be updated. In Ambari, add an entry for the client library path to the "Advanced pxf-public-classpath" setting:

/opt/wandisco/fusion/client/lib/*

3.4.19. Apache Slider

Apache Slider is an application that lets you deploy existing distributed applications on an Apache Hadoop YARN cluster, monitor them and make them larger or smaller as desired, even while the application is running. As these applications run within YARN containers, they are isolated from the rest of the cluster, making Slider an ideal mechanism for running applications that are otherwise incompatible with your Hadoop cluster.

WANdisco Fusion supports the use of Slider via the Slider CLI only, not the Ambari Slider View.

3.4.20. KMS / TDE Encryption and Fusion

TDE (Transparent Data Encryption) is available to Hadoop users who want to enhance their data security. TDE uses Hadoop KMS (Key Management Server), typically provided by Ranger KMS (in Hortonworks/Ambari installs) or Navigator Key Trustee (in Cloudera installs).

In simple terms, an encryption key or EEK (encrypted encryption key) is used to encrypt the HDFS data that is physically stored on disk. This encryption occurs within the HDFS client, before the data is transported to the DataNode.

The key management server (KMS) centrally holds these EEKs in an encrypted format. Access control lists (ACLs) define what users and groups are permitted to do with these keys, including creating keys, deleting keys, rolling keys over (re-encrypting the EEK, not changing the EEK itself), obtaining the EEK, and listing keys.

Encrypted data in HDFS is organized into encrypted zones. A zone is defined by choosing a path (e.g. /data/warehouse/encrypted1) and specifying which EEK protects it, that is, the key used to encrypt and decrypt the data in that zone. A zone is configured with a single key, but different zones can have different keys. Not all of HDFS needs to be encrypted: only the zones an admin defines, and all subdirectories of those zones, are encrypted.
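Because a zone covers its path and every subdirectory below it, finding the key that protects a given file amounts to a longest-prefix match on whole path components. The sketch below illustrates only that rule: `zone_key_for` is a hypothetical helper and the zone table in the usage note is made up. On a real cluster you would list the zones with `hdfs crypto -listZones` rather than hard-code them.

```python
from pathlib import PurePosixPath
from typing import Dict, Optional


def zone_key_for(path: str, zones: Dict[str, str]) -> Optional[str]:
    """Return the key name of the encrypted zone containing `path`, or None.

    `zones` maps zone root paths to key names. Matching is done on whole path
    components, so /data/warehouse/encrypted10 is NOT inside
    /data/warehouse/encrypted1; if several roots match, the deepest wins.
    """
    p = PurePosixPath(path)
    best_root: Optional[PurePosixPath] = None
    best_key: Optional[str] = None
    for root, key in zones.items():
        r = PurePosixPath(root)
        inside = p == r or r in p.parents
        if inside and (best_root is None or len(r.parts) > len(best_root.parts)):
            best_root, best_key = r, key
    return best_key
```

For example, with `{"/data/warehouse/encrypted1": "key1"}`, a file at `/data/warehouse/encrypted1/t/part-0` maps to `key1`, while `/tmp/x` maps to `None` and needs no EEK access at all.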

To read from or write to a zone, a user needs to be granted the appropriate ACL access to that zone's EEK, specifically the "Get Metadata" and "Decrypt EEK" permissions.

WANdisco Fusion runs as an HDFS user just like any other user. As such, Fusion needs permission to read from and write to an encrypted zone.

Fusion may need to write metadata (for consistency checks, make consistent and other meta operations), tokens or other administrative items that fall under an encrypted zone. Depending on configuration and requirements, the make consistent operation itself writes data, and therefore needs access.

Additionally, KMS provides its own proxyuser implementation, which is separate from the HDFS proxyusers. It works in the same way, defining who is permitted to impersonate another user while working with EEKs.

To add complication, the "hdfs" user is typically blacklisted from performing the "Decrypt EEK" function by default. Although "hdfs" is a superuser that wields great power in the cluster, that does not make it a superuser in KMS. Because "hdfs" is commonly the default user for fixing things in HDFS (it overrides permissions), it is sensible to deny it access to EEKs by default. Note: Cloudera also appears to blacklist the group "supergroup", which is the group defined as the superusergroup. Any users added to "supergroup" become superusers, but they are then also automatically blacklisted from performing EEK operations.

Configuring Fusion

To configure Fusion for access to encrypted zones, two aspects need to be considered:

-

The local user that Fusion runs as in HDFS (after Kerberos auth_to_local mapping) must be able to access and decrypt EEKs.

-

Although other users perform the requests themselves, the Fusion server proxies those requests. As such, a KMS proxyuser entry for the Fusion user must also be configured.

Step-by-step guide

The following items need to be considered within KMS configuration to ensure Fusion has access:

The kms-site configuration (such as Advanced kms-site in Ambari) contains its own auth_to_local-style parameter, called “hadoop.kms.authentication.kerberos.name.rules”.

Ensure that any auth_to_local mapping used for the Fusion principal is also contained here. The easiest way to achieve this is to copy the value of hadoop.security.auth_to_local from core-site.xml.

The kms-site configuration (such as Custom kms-site in Ambari) contains proxyuser parameters such as:

hadoop.kms.proxyuser.USERNAME.hosts
hadoop.kms.proxyuser.USERNAME.groups
hadoop.kms.proxyuser.USERNAME.users

Entries should be created for the local Fusion user (after auth_to_local translation) to allow Fusion to proxy (impersonate) other users' requests. This could be as simple as:

hadoop.kms.proxyuser.USERNAME.hosts=fusion.node1.hostname,fusion.node2.hostname
hadoop.kms.proxyuser.USERNAME.groups=*
hadoop.kms.proxyuser.USERNAME.users=*

The dbks-site configuration contains the parameter hadoop.kms.blacklist.DECRYPT_EEK. Ensure that it does not contain the username that Fusion uses (after auth_to_local translation), or any group that user belongs to.
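Blacklist values such as hadoop.kms.blacklist.DECRYPT_EEK follow the usual Hadoop ACL syntax: a comma-separated list of users, then a space, then a comma-separated list of groups (for example `hdfs supergroup`), where `*` means everyone. That is why a Fusion user placed in a blacklisted group is blocked too. The sketch below checks a user and its groups against such a value; `parse_acl` and `is_blacklisted` are hypothetical helpers, and you must supply the Fusion user's name (after auth_to_local translation) and its groups yourself.

```python
from typing import Iterable, Set, Tuple


def parse_acl(value: str) -> Tuple[bool, Set[str], Set[str]]:
    """Parse a Hadoop-style ACL string into (allow_all, users, groups).

    Users come before the first space and groups after it; each side is
    comma-separated. A '*' on either side means everyone.
    """
    parts = value.split(" ", 1)
    if any(part.strip() == "*" for part in parts):
        return True, set(), set()
    users = {u.strip() for u in parts[0].split(",") if u.strip()}
    groups: Set[str] = set()
    if len(parts) > 1:
        groups = {g.strip() for g in parts[1].split(",") if g.strip()}
    return False, users, groups


def is_blacklisted(user: str, user_groups: Iterable[str], blacklist_value: str) -> bool:
    """True if the user, or any group it belongs to, matches the blacklist value."""
    allow_all, users, groups = parse_acl(blacklist_value)
    return allow_all or user in users or bool(groups & set(user_groups))
```

With a blacklist of `hdfs supergroup`, a Fusion user named `fusion` in group `hadoop` passes, but the same user is blocked as soon as it is also added to `supergroup`.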

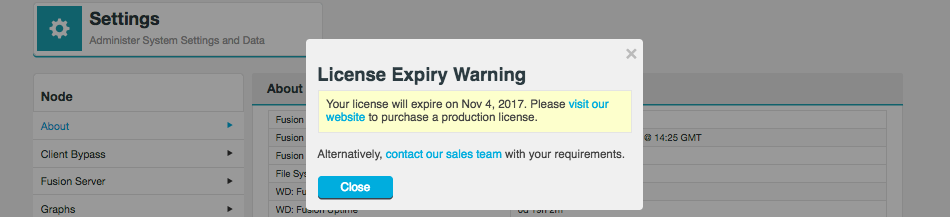

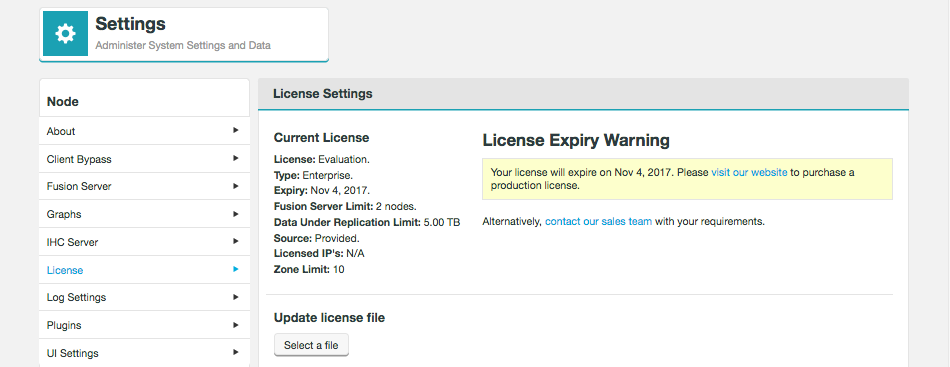

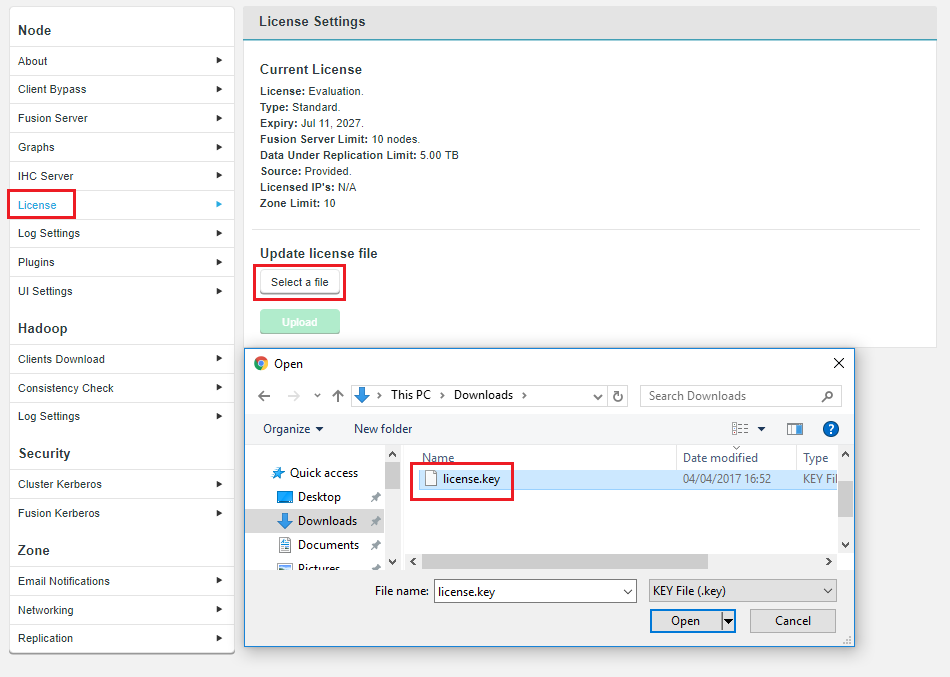

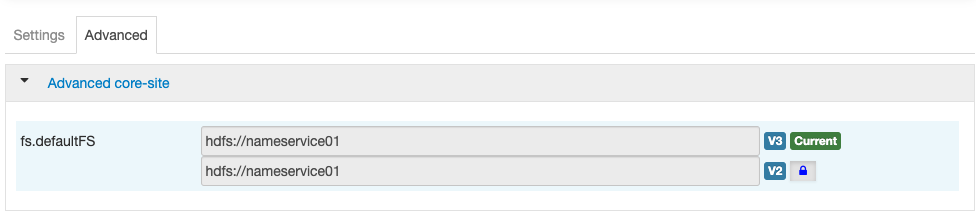

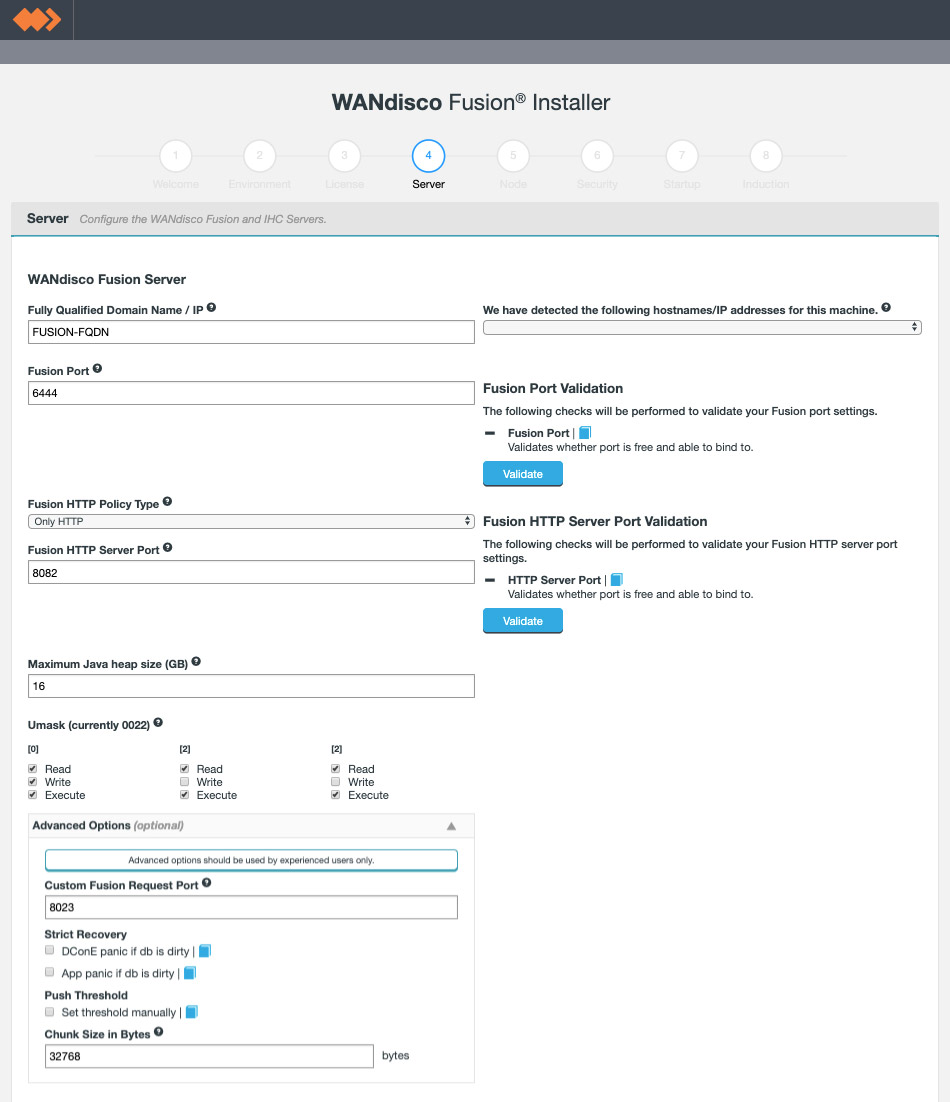

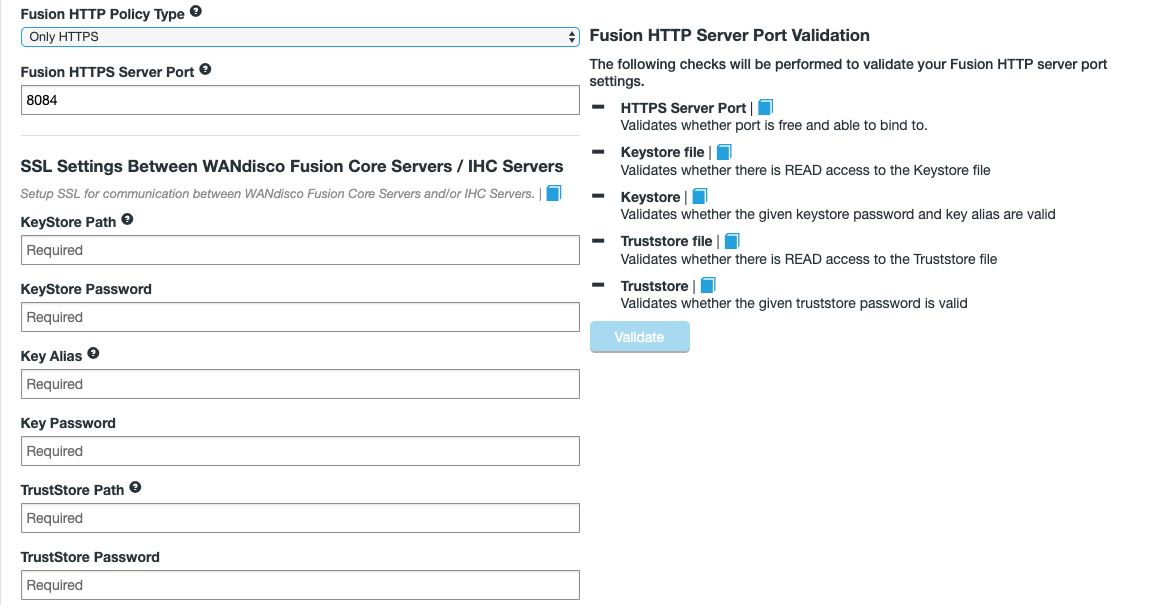

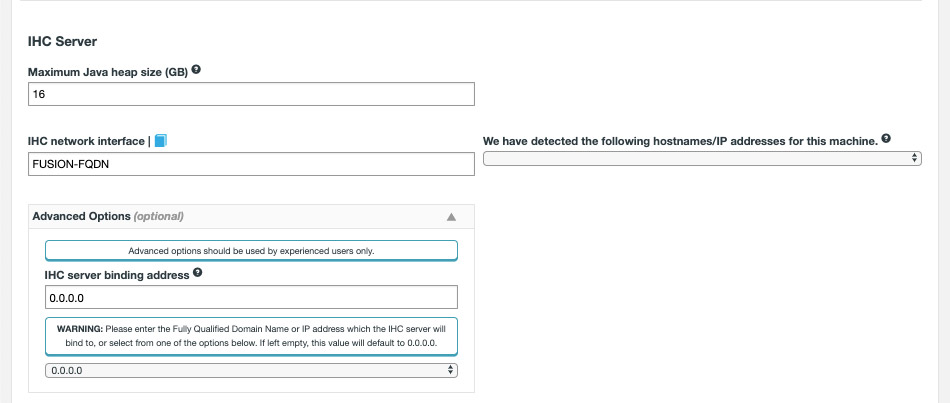

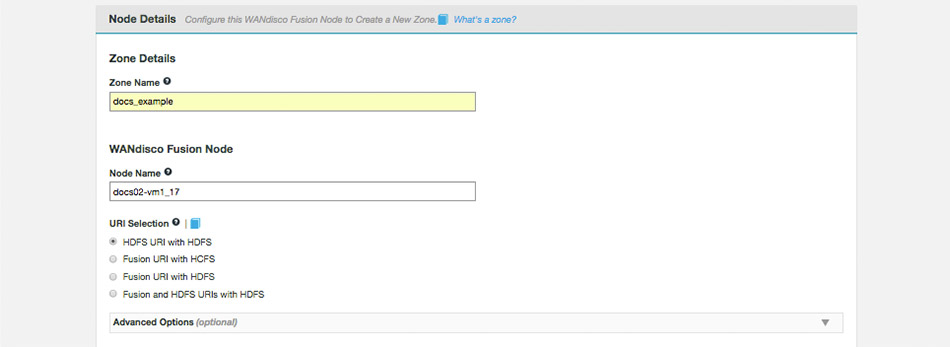

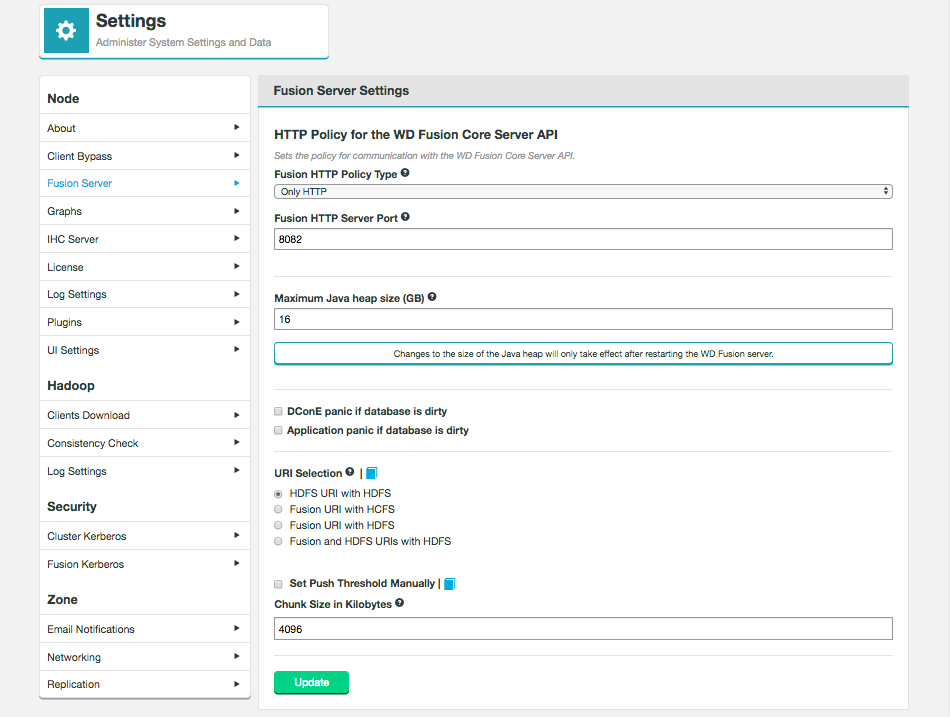

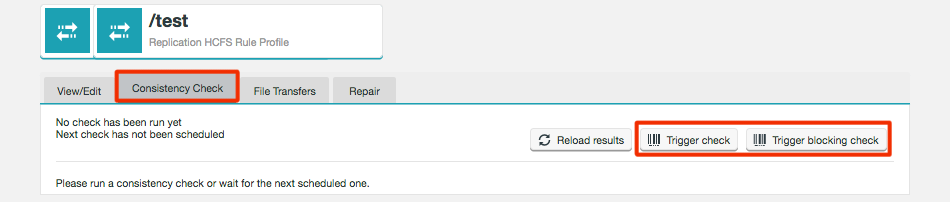

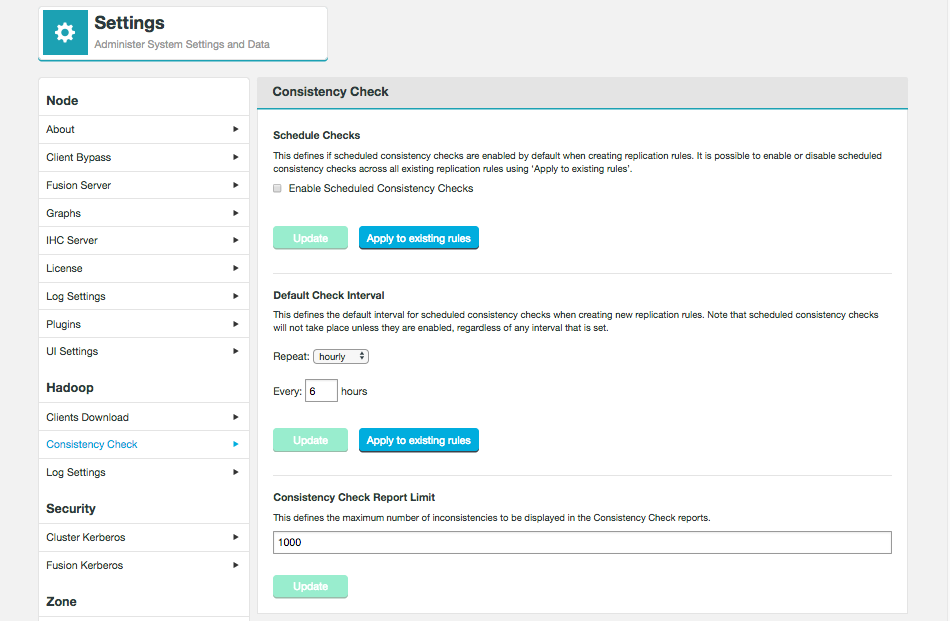

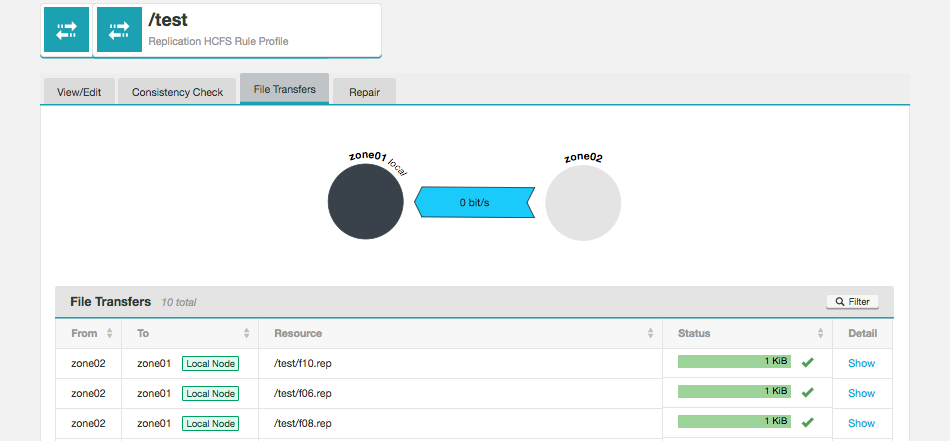

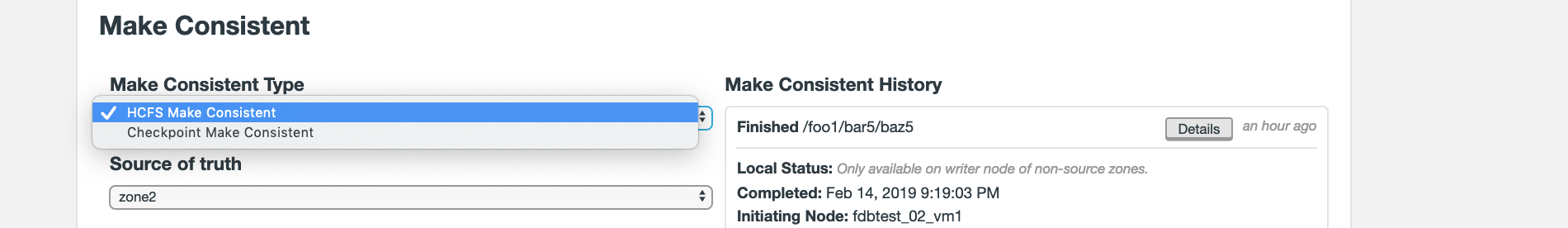

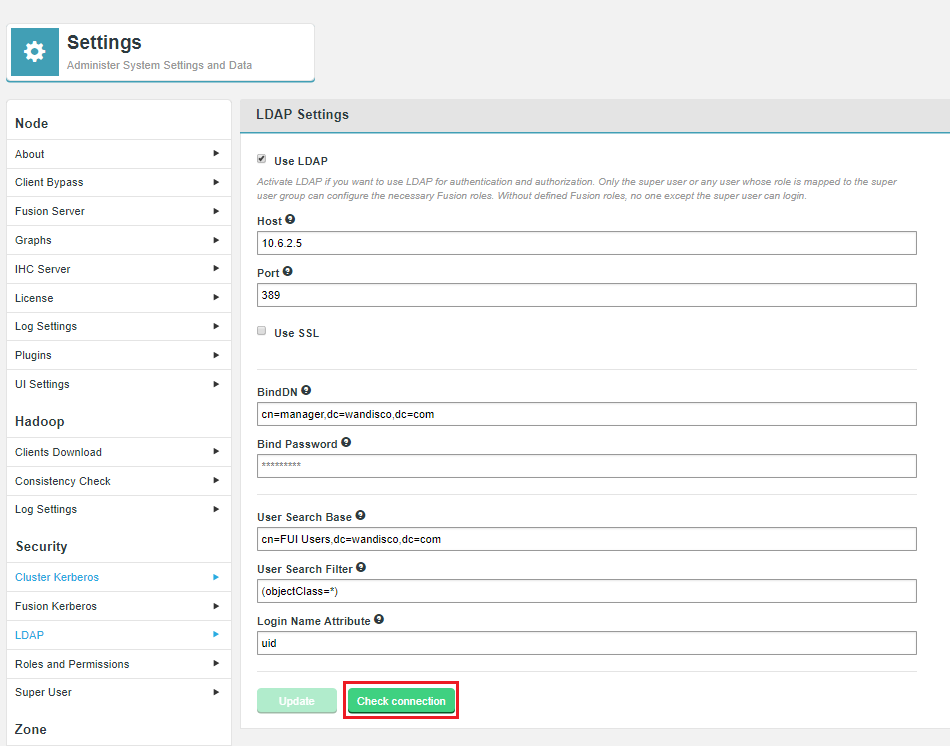

In the KMS ACLs (for example, in Ranger KMS), ensure that the Fusion user (after auth_to_local translation) has "Get Metadata" and "Decrypt EEK" permissions on the relevant keys.